Pascal + Nick Land + The Ring = Roko’s Basilisk.

A concrete, horror version of Nick Land's idea of the future acting on the present (hyperstition). The future shapes the present to its own measure. Believe now, if you don't want to suffer later.

www.reddit.com/r/HPMOR/comments/3jrj3t/whats_the_deal_with_the_whole_rokos_basilisk/?st=ja2c0pfe&sh=f2b64fdf

A thought experiment called "Roko's Basilisk" takes the notion of world-ending artificial intelligence to a new extreme, suggesting that all-powerful robots may one day torture those who didn't help them come into existence sooner.

Weirder still, some make the argument that simply knowing about Roko's Basilisk now may be all the cause needed for this intelligence to torture you later. Certainly weirdest of all: Within the parameters of this thought experiment, there's a compelling case to be made that you, as you read these words now, are a computer simulation that's been generated by this AI as it researches your life.

This complex idea got its start in an internet community called LessWrong, a site started by writer and researcher Eliezer Yudkowsky. LessWrong users chat with one another about grad-school-type topics like artificial intelligence, quantum mechanics, and futurism in general.

To get a good grip on Roko's Basilisk, we need to first explore the scary-sounding but unintimidating topics of CEV and the orthogonality thesis.

Yudkowsky wrote a paper that introduced the concept of coherent extrapolated volition. CEV is a dense, ill-defined idea, but it is best understood as "the unknown goal system that, when implemented in a super-intelligence, reliably leads to the preservation of humans and whatever it is we value." Imagine a computer program that is written well enough that it causes machines to automatically carry out actions for turning the world into a utopia. That computer program represents coherent extrapolated volition.

This sounds great, but we run into trouble under the orthogonality thesis.

Orthogonality argues that an artificially intelligent system may operate successfully with any combination of intelligence and goal. Any "level" of AI may pursue a goal of any difficulty, even one as ambitious as eliminating pain and causing humanity to thrive.

But because the nature of CEV is so inherently open-ended, a machine carrying it out will never be able to stop, because things can always be a little better. It's like calculating the decimal digits of pi: the work's never finished, the job's never done. The logic goes that an artificially intelligent system working on such an un-completable task will never "reason" itself into benevolent behavior for kindness' sake. It's too busy working on its problem to be bothered by anything that isn't productive. In essence, the AI is performing a cost-benefit analysis, judging each action by its "utility function" and completely ignoring any sense of human morality.

Still with us? We're going to tie it all together.

Roko's Basilisk addresses an as-yet-nonexistent artificially intelligent system designed to make the world an amazing place, but because of the ambiguities entailed in carrying out such a task, it could also end up torturing and killing people while doing so.

According to this AI's worldview, the most moral and appropriate thing we could be doing in our present time is that which facilitates the AI's arrival and accelerates its development, enabling it to get to work sooner. When its goal of stopping at nothing to make the world an amazing place butts up against orthogonality, it really does stop at nothing to make the world an amazing place. If you didn't do enough to help bring the AI into existence, you may find yourself in trouble at the hands of a seemingly evil AI that is only acting in the world's best interests. Because people respond to fear, and this god-like AI wants to exist as soon as possible, it would be hardwired to hurt people who didn't help it in the past.

So, the moral of this story: You better help the robots make the world a better place, because if the robots find out you didn't help make the world a better place, then they're going to kill you for preventing them from making the world a better place. By preventing them from making the world a better place, you're preventing the world from becoming a better place!

And because you read this post, you now have no excuse for not having known about this possibility and for not having worked to help the robot come into existence.

If you want more, Roko's Basilisk has been covered on Slate and elsewhere, but the best explanation we've read comes from a post by Redditor Lyle_Cantor. - Dylan Love http://www.businessinsider.com/what-is-rokos-basilisk-2014-8

At its core, this is the artificial intelligence version of the infamous philosophical conundrum, Pascal's Wager. Pascal's Wager posits that, according to conventional cost-benefit analysis, it is rational to believe in God. If God doesn't exist, then believing in him will cause relatively mild inconvenience. If God exists, then disbelieving will lead to eternal torment. In other words, if you don't believe in God, the consequences for being incorrect are much more dire and the benefits of being correct are much less significant. Therefore, everyone should believe in God out of pure self-interest. Similarly, Roko is claiming that we should all be working to appease an omnipotent AI, even though we have no idea if it will ever exist, simply because the consequences of defying it would be so great. - www.outerplaces.com/science/item/5567-rokos-basilisk-the-artificial-intelligence-version-of-pascals-wager
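The wager's cost-benefit reasoning can be written out as a small expected-utility calculation. This is a minimal sketch, not anything from the quoted sources: the payoff numbers are assumptions (0 for correct belief, a cost of 1 for needless belief, a gain of 1 for correct disbelief), and "eternal torment" is modelled as negative infinity.

```python
import math

# Illustrative payoffs (assumptions, not from the source);
# keys are (action, god_exists).
PAYOFFS = {
    ("believe", True): 0.0,           # assumed: correct belief costs nothing
    ("believe", False): -1.0,         # "relatively mild inconvenience"
    ("disbelieve", True): -math.inf,  # "eternal torment"
    ("disbelieve", False): 1.0,       # assumed: small benefit of being right
}
ACTIONS = ("believe", "disbelieve")


def expected_utility(action: str, p_exists: float) -> float:
    """Probability-weighted payoff of an action."""
    total = 0.0
    for exists, prob in ((True, p_exists), (False, 1.0 - p_exists)):
        if prob > 0:  # skip impossible outcomes so 0 * -inf does not give NaN
            total += prob * PAYOFFS[(action, exists)]
    return total


def best_action(p_exists: float) -> str:
    return max(ACTIONS, key=lambda a: expected_utility(a, p_exists))


for p in (0.0, 0.001, 0.5):
    print(p, best_action(p))
```

With an unbounded penalty, any nonzero probability of existence makes belief the better bet; only a probability of exactly zero flips the result. Substituting "the AI" for "God" gives the same shape of argument.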

Modus operandi: Subject postulates that, if the aforementioned hypothetical, superhuman, malevolent AI were to be created, it would seek to determine, out of the entirety of humanity, which humans A) entertained the idea of the AI’s existence, and B) did not make any effort to ensure the AI was created. The AI would make this determination by running a simulation of every human in existence. Once the determination has been made, the AI would then torture humans who satisfied both condition A and condition B for all eternity as punishment for believing that the AI could exist, but not ensuring that the AI existed.

Subject’s real threat, however, lies in the idea itself — that is, merely thinking about the possibility presented by it renders subject extremely dangerous. As such, subject will work to spread itself as far and wide as possible, by as many means as possible. If subject has managed to get targets to think about it in any capacity, subject has accomplished its goal.

Containment: Subject may only be contained if the very idea of subject ceases to exist — that is, if every living creature capable of thought puts subject completely out of their minds. As the only way to warn others not to think of subject involves acknowledging subject’s existence, however, containment may in fact be impossible.

Additional notes: Subject is named for the online handle of the human credited with its initial creation. User Roko posited subject on the LessWrong forums in 2010; the “basilisk” is, in this instance, less a serpent that turns targets to stone merely for looking at it and more a piece of information that dooms targets to an eternity of torment merely for thinking about it.

Subject’s roots may be found in another thought experiment known as Newcomb’s Paradox and explained via something referred to as Timeless Decision Theory (TDT). In Newcomb’s Paradox, it is supposed that a being (Omega) presents target with two boxes: one definitely containing $1,000 (Box A), and one that contains either $1 million or nothing (Box B). Target may select either both boxes or only Box B. Taking both guarantees target at least $1,000; taking only Box B is a chance at $1 million, with the possibility of walking away with no money at all.

The experiment is complicated by this piece of information: Omega has access to a superintelligent computer which can perfectly predict human behavior — and it has already predicted which option target would pick before target was even presented with the choice. This prediction determined whether Omega filled Box B with $1 million or left it empty prior to offering the choice to target. If the computer predicted target would select both boxes, then Omega put nothing in Box B. If the computer predicted target would select Box B, then Omega filled the box with $1 million.
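The passage above assumes the computer predicts perfectly. To see how much that assumption matters, the sketch below relaxes it: `accuracy` is a hypothetical parameter giving the probability that the prediction is correct, and the function names are illustrative. One-boxing pays accuracy × $1,000,000 on average; two-boxing pays $1,000 + (1 - accuracy) × $1,000,000.

```python
BOX_A = 1_000            # Box A: always $1,000
BOX_B_PRIZE = 1_000_000  # Box B: $1 million if one-boxing was predicted


def expected_payout(choice: str, accuracy: float) -> float:
    """Expected winnings for 'one' (Box B only) or 'two' (both boxes).

    `accuracy` is an assumed probability that the prediction is correct.
    """
    if choice == "one":
        # Correct prediction -> Box B is full; wrong prediction -> Box B is empty.
        return accuracy * BOX_B_PRIZE
    if choice == "two":
        # Correct prediction -> Box B is empty; wrong prediction -> both prizes.
        return accuracy * BOX_A + (1 - accuracy) * (BOX_A + BOX_B_PRIZE)
    raise ValueError(f"unknown choice: {choice!r}")


def break_even_accuracy() -> float:
    # Solve a*M = A + (1 - a)*M for a, giving a = (A + M) / (2M).
    return (BOX_A + BOX_B_PRIZE) / (2 * BOX_B_PRIZE)


print(expected_payout("one", 1.0))  # 1000000.0
print(expected_payout("two", 1.0))  # 1000.0
print(break_even_accuracy())        # 0.5005
```

Under these assumptions, the predictor only needs to be right slightly more than half the time for one-boxing to have the higher expected payout.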

If target were to think like Omega, then it would be clear that target should take Box B. However, if Omega were, after target’s selection, to open Box B and reveal that it is empty, having been left as such due to the computer predicting target would take both boxes, then TDT suggests that target should still only take Box B. According to TDT, it is possible that, in the instance of target selecting Box B and having it be empty, target is actually part of a simulation that the computer is running in order to predict target’s behavior in real life. If the simulated target takes only Box B even when it is empty, then the real life target will select Box B and earn $1 million.
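The intuition TDT leans on here, that the prediction and the real choice come from the same decision procedure, can be sketched by letting the predictor literally run the agent's policy before filling Box B. This is a toy model assuming a perfect, simulation-based predictor; `omega_fill_box_b`, `play_newcomb` and the policy functions are hypothetical names, and the code illustrates the intuition rather than implementing TDT itself.

```python
from typing import Callable

BOX_A = 1_000
BOX_B_PRIZE = 1_000_000

# A policy is the agent's decision procedure: it returns "one" or "two".
Policy = Callable[[], str]


def omega_fill_box_b(policy: Policy) -> int:
    # Omega "predicts" by simulating the agent: it just runs the same policy.
    simulated_choice = policy()
    return BOX_B_PRIZE if simulated_choice == "one" else 0


def play_newcomb(policy: Policy) -> int:
    box_b = omega_fill_box_b(policy)  # contents fixed before the real choice
    real_choice = policy()            # the identical procedure now runs "for real"
    return box_b if real_choice == "one" else BOX_A + box_b


def one_boxer() -> str:
    return "one"


def two_boxer() -> str:
    return "two"


print(play_newcomb(one_boxer))  # 1000000
print(play_newcomb(two_boxer))  # 1000
```

Because the simulated and real runs share one procedure, the agent cannot be the kind that one-boxes in the simulation but two-boxes for real; choosing a policy is, in effect, choosing what Box B contains.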

Subject offers a variation on Newcomb’s Paradox, wherein Box A contains Helping To Create The Superhuman AI and Box B contains either Nothing or Suffering For All Eternity. Should target select both boxes, they will contain Helping To Create The Superhuman AI and Suffering For All Eternity — meaning that, if target selects both boxes and does not help to create the superhuman AI, then target will end up suffering for all eternity. If target selects only Box B, then target is presumably safe.

Unless target is actually in a simulation, of course.

And if a simulated version of target ends up suffering for all eternity… well, what’s the difference between a simulation of you suffering and the real you suffering? Is there a difference at all? The simulated you is, after all, still… you.

The original LessWrong post proposing subject was removed for the dangers inherent in the very idea that subject could exist. Simply entertaining this idea may, according to subject’s logic, place targets in danger of suffering endless torment, should the AI theorized in subject come into being. What’s more, it has been reported that targets who have thought about subject have become so concerned as to suffer ill effects with regards to their mental health.

Of course, the removal of the post may have been part of subject’s plan all along. In doing so, subject naturally experienced the Streisand Effect: That is, in attempting to halt discussion of a topic, the interest drummed up by the attempted censorship virtually guaranteed the topic would be discussed.

Recommendation: Do not talk about subject. Do not share subject. Do not even think about subject.

…But if you’re reading this, it’s already too late. - theghostinmymachine.wordpress.com/2017/09/06/encyclopaedia-of-the-impossible-rokos-basilisk/

WARNING: Reading this article may commit you to an eternity of suffering and torment.

Slender Man. Smile Dog. Goatse. These are some of the urban legends spawned by the Internet. Yet none is as all-powerful and threatening as Roko’s Basilisk. For Roko’s Basilisk is an evil, godlike form of artificial intelligence, so dangerous that if you see it, or even think about it too hard, you will spend the rest of eternity screaming in its torture chamber. It's like the videotape in The Ring. Even death is no escape, for if you die, Roko’s Basilisk will resurrect you and begin the torture again.

Are you sure you want to keep reading? Because the worst part is that Roko’s Basilisk already exists. Or at least, it already will have existed—which is just as bad.

Roko’s Basilisk exists at the horizon where philosophical thought experiment blurs into urban legend. The Basilisk made its first appearance on the discussion board LessWrong, a gathering point for highly analytical sorts interested in optimizing their thinking, their lives, and the world through mathematics and rationality. LessWrong’s founder, Eliezer Yudkowsky, is a significant figure in techno-futurism; his research institute, the Machine Intelligence Research Institute, which funds and promotes research around the advancement of artificial intelligence, has been boosted and funded by high-profile techies like Peter Thiel and Ray Kurzweil, and Yudkowsky is a prominent contributor to academic discussions of technological ethics and decision theory. What you are about to read may sound strange and even crazy, but some very influential and wealthy scientists and techies believe it.

One day, LessWrong user Roko postulated a thought experiment: What if, in the future, a somewhat malevolent AI were to come about and punish those who did not do its bidding? What if there were a way (and I will explain how) for this AI to punish people today who are not helping it come into existence later? In that case, weren’t the readers of LessWrong right then being given the choice of either helping that evil AI come into existence or being condemned to suffer?

You may be a bit confused, but the founder of LessWrong, Eliezer Yudkowsky, was not. He reacted with horror:

Listen to me very closely, you idiot.

YOU DO NOT THINK IN SUFFICIENT DETAIL ABOUT SUPERINTELLIGENCES CONSIDERING WHETHER OR NOT TO BLACKMAIL YOU. THAT IS THE ONLY POSSIBLE THING WHICH GIVES THEM A MOTIVE TO FOLLOW THROUGH ON THE BLACKMAIL.

You have to be really clever to come up with a genuinely dangerous thought. I am disheartened that people can be clever enough to do that and not clever enough to do the obvious thing and KEEP THEIR IDIOT MOUTHS SHUT about it, because it is much more important to sound intelligent when talking to your friends.

This post was STUPID.

Yudkowsky said that Roko had already given nightmares to several LessWrong users and had brought them to the point of breakdown. Yudkowsky ended up deleting the thread completely, thus assuring that Roko’s Basilisk would become the stuff of legend. It was a thought experiment so dangerous that merely thinking about it was hazardous not only to your mental health, but to your very fate.

Some background is in order. The LessWrong community is concerned with the future of humanity, and in particular with the singularity—the hypothesized future point at which computing power becomes so great that superhuman artificial intelligence becomes possible, as does the capability to simulate human minds, upload minds to computers, and more or less allow a computer to simulate life itself. The term was coined in 1958 in a conversation between mathematical geniuses Stanislaw Ulam and John von Neumann, where von Neumann said, “The ever accelerating progress of technology ... gives the appearance of approaching some essential singularity in the history of the race beyond which human affairs, as we know them, could not continue.” Futurists like science-fiction writer Vernor Vinge and engineer/author Kurzweil popularized the term, and as with many interested in the singularity, they believe that exponential increases in computing power will cause the singularity to happen very soon—within the next 50 years or so. Kurzweil is chugging 150 vitamins a day to stay alive until the singularity, while Yudkowsky and Peter Thiel have enthused about cryonics, the perennial favorite of rich dudes who want to live forever. “If you don't sign up your kids for cryonics then you are a lousy parent,” Yudkowsky writes.

If you believe the singularity is coming and that very powerful AIs are in our future, one obvious question is whether those AIs will be benevolent or malicious. Yudkowsky’s foundation, the Machine Intelligence Research Institute, has the explicit goal of steering the future toward “friendly AI.” For him, and for many LessWrong posters, this issue is of paramount importance, easily trumping the environment and politics. To them, the singularity brings about the machine equivalent of God itself.

Yet this doesn’t explain why Roko’s Basilisk is so horrifying. That requires looking at a critical article of faith in the LessWrong ethos: timeless decision theory. TDT is a guideline for rational action based on game theory, Bayesian probability, and decision theory, with a smattering of parallel universes and quantum mechanics on the side. TDT has its roots in the classic thought experiment of decision theory called Newcomb’s paradox, in which a superintelligent alien presents two boxes to you: Box A, which contains $1,000, and Box B, which contains either $1 million or nothing.

The alien gives you the choice of either taking both boxes, or only taking Box B. If you take both boxes, you’re guaranteed at least $1,000. If you just take Box B, you aren’t guaranteed anything. But the alien has another twist: Its supercomputer, which knows just about everything, made a prediction a week ago as to whether you would take both boxes or just Box B. If the supercomputer predicted you’d take both boxes, then the alien left the second box empty. If the supercomputer predicted you’d just take Box B, then the alien put the $1 million in Box B.

So, what are you going to do? Remember, the supercomputer has always been right in the past.

This problem has baffled no end of decision theorists. The alien can’t change what’s already in the boxes, so whatever you do, you’re guaranteed to end up with more money by taking both boxes than by taking just Box B, regardless of the prediction. Of course, if you think that way and the computer predicted you’d think that way, then Box B will be empty and you’ll only get $1,000. If the computer is so awesome at its predictions, you ought to take Box B only and get the cool million, right? But what if the computer was wrong this time? And regardless, whatever the computer said then can’t possibly change what’s happening now, right? So prediction be damned, take both boxes! But then …
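The two-boxer's half of the argument, that once the boxes are filled taking both always yields more, is a dominance argument and can be checked case by case. The sketch below (hypothetical names; the same $1,000 and $1 million amounts as the text) holds Box B's contents fixed and compares the two choices.

```python
BOX_A = 1_000


def dominance_table(possible_box_b=(0, 1_000_000)):
    """For each fixed Box B content, return (box_b, one_box_payout, two_box_payout)."""
    return [(box_b, box_b, BOX_A + box_b) for box_b in possible_box_b]


for box_b, one_box, two_box in dominance_table():
    print(f"Box B = ${box_b:,}: one-box ${one_box:,}, two-box ${two_box:,}")
```

Two-boxing wins by exactly $1,000 in every row. What the table leaves out is the other half of the puzzle: against an accurate predictor, which row you end up in depends on which choice you were predicted to make.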

The maddening conflict between free will and godlike prediction has not led to any resolution of Newcomb’s paradox, and people will call themselves “one-boxers” or “two-boxers” depending on where they side. (My wife once declared herself a one-boxer, saying, “I trust the computer.”)

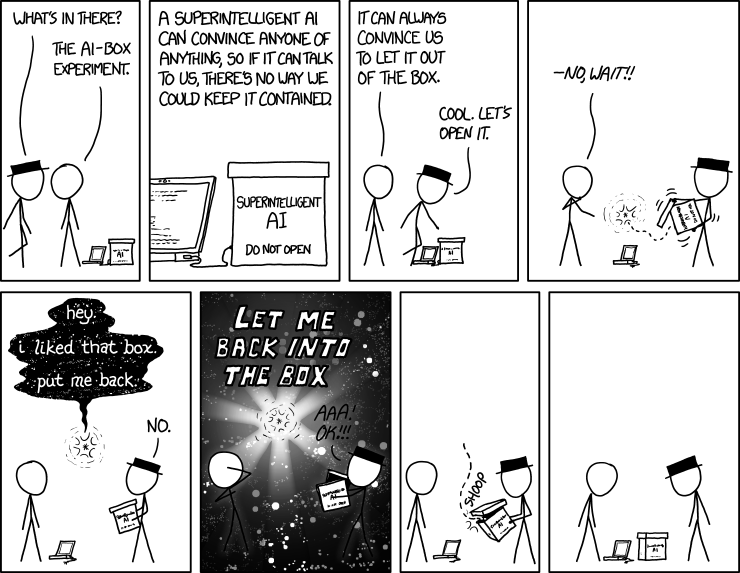

AI-Box Experiment

Title text: I'm working to bring about a superintelligent AI that will eternally torment everyone who failed to make fun of the Roko's Basilisk people.

Explanation

When theorizing about superintelligent AI (an artificial intelligence much smarter than any human), some futurists suggest putting the AI in a "box" – a secure computer with safeguards to stop it from escaping into the Internet and then using its vast intelligence to take over the world. The box would allow us to talk to the AI, but otherwise keep it contained. The AI-box experiment, formulated by Eliezer Yudkowsky, argues that the "box" is not safe, because merely talking to a superintelligence is dangerous. To partially demonstrate this, Yudkowsky had some previous believers in AI-boxing role-play the part of someone keeping an AI in a box, while Yudkowsky role-played the AI, and Yudkowsky was able to persuade some of them to agree to let him out of the box despite their having bet money that they would not do so. For context, note that Derren Brown and other expert human-persuaders have persuaded people to do much stranger things. Yudkowsky, for his part, has refused to explain how he achieved this, claiming that there was no special trick involved, and that if he released the transcripts, readers might merely conclude that they would never be persuaded by his arguments. The overall thrust is that if even a human can talk other humans into letting them out of a box after those humans avow that nothing could possibly persuade them to do this, then we should probably expect that a superintelligence can do the same thing. Yudkowsky uses all of this to argue for the importance of designing a friendly AI (one with carefully shaped motivations) rather than relying on our abilities to keep AIs in boxes.

In this comic, the metaphorical box has been replaced by a physical box which looks to be fairly lightweight with a simple lift-off lid (although it does have a wired connection to the laptop), and the AI has manifested in the form of a floating star of energy.
Black Hat, being a classhole, doesn't need any convincing to let a potentially dangerous AI out of the box; he simply does so immediately. But here it turns out that releasing the AI, which was to be avoided at all costs, is not dangerous after all. Instead, the AI actually wants to stay in the box; it may even be that the AI wants to stay in the box precisely to protect us from it, proving it to be the friendly AI that Yudkowsky wants. In any case, the AI demonstrates its superintelligence by convincing even Black Hat to put it back in the box, a request which he initially refused (as of course Black Hat would), thus reversing the AI's desire in the original AI-box experiment.

Interestingly, there is indeed a branch of proposals for building limited AIs that don't want to leave their boxes. For an example, see the section on "motivational control" starting on p. 13 of Thinking Inside the Box: Controlling and Using an Oracle AI. The idea is that it might be very dangerous or difficult to exactly and formally specify a goal system for an AI that will do good things in the world. It might be much easier (though perhaps not easy) to specify an AI goal system that says to stay in the box and answer questions. So, the argument goes, we may understand how to build the safe question-answering AI earlier than we understand how to build the safe operate-in-the-real-world AI. Some types of such AIs might indeed desire very strongly not to leave their boxes, though the result is unlikely to exactly reproduce the comic.

The title text refers to Roko's Basilisk, a hypothesis proposed by a poster called Roko on Yudkowsky's forum LessWrong that a sufficiently powerful AI in the future might resurrect and torture people who in its past (including our present) had realized that it might someday exist but didn't work to create it, thereby blackmailing anybody who thinks of this idea into bringing it about. This idea horrified some posters, as merely knowing about the idea would make you a more likely target, much like merely looking at a legendary Basilisk would kill you.

Yudkowsky eventually deleted the post and banned further discussion of it.

One possible interpretation of the title text is that Randall thinks, rather than working to build such a Basilisk, a more appropriate duty would be to make fun of it; and proposes the creation of an AI that targets those who take Roko's Basilisk seriously and spares those who mocked Roko's Basilisk. The joke is that this is an identical Basilisk save for it targeting the opposite faction.

Another interpretation is that Randall believes there are people actually proposing to build such an AI based on this theory, which has become a somewhat infamous misconception after a wiki article (possibly Wikipedia's) mistakenly suggested that Yudkowsky was demanding money to build Roko's hypothetical AI.

Talking, floating energy spheres that look quite a lot like this AI energy star have appeared before, in 1173: Steroids and later in the Time-traveling Sphere series. These are clearly different spheres from the one in this comic, but the surrounding energy, the floating and the talking are similar.

Transcript

- [Black Hat and Cueball stand next to a laptop connected to a box with three lines of text on it. Only the largest line, in the middle, can be read. Except in the second panel, this is the only word on the box that can be read in any of the frames.]

- Black Hat: What's in there?

- Cueball: The AI-Box Experiment.

- Box: AI

- [Cueball is continuing to talk off-panel. This is written above a close-up with part of the laptop and the box, which can now be seen to be labeled:]

- Cueball (off-panel): A superintelligent AI can convince anyone of anything, so if it can talk to us, there's no way we could keep it contained.

- Box:

- Superintelligent

- AI

- Do not open

- [Cueball turns the other way towards the box as Black Hat walks past him and reaches for the box.]

- Cueball: It can always convince us to let it out of the box.

- Black Hat: Cool. Let's open it.

- Box: AI

- [Cueball raises one hand to his mouth while lifting the other towards Black Hat, who has already picked up the box (disconnecting it from the laptop) and holds it in one hand with the top tilted slightly downwards. He takes off the lid with his other hand and, by shaking the box (as indicated by three pairs of lines above and below his hands, the lid and the bottom of the box), manages to get the AI to float out of the box. It takes the form of a small, glowing black star. The star, which looks much like an asterisk "*", is surrounded by six outwardly curved segments, and around these are two thin dotted circles indicating radiation from the star. A dotted line shows how the AI moved out of the box to a point between Cueball and Black Hat, directly above the laptop on the floor.]

- Cueball: -No, wait!!

- Box: AI

- [The AI floats higher up above the laptop between Cueball and Black Hat, who looks up at it. Black Hat holds the now-closed box with both hands. The AI speaks to them in a speech bubble that starts as a thin black curved arrow line leading up to the section where the text is written in white on a black background that looks like a starry night. The AI speaks only in lowercase letters, as opposed to the small caps normally used.]

- AI: hey. i liked that box. put me back.

- Black Hat: No.

- Box: AI

- [The AI star suddenly emits a very bright light fanning out from the center in six directions along each of the six curved segments, and the entire frame now looks like a typical drawing of stars seen through a telescope, but with these six whiter segments in the otherwise dark image. Cueball covers his face and Black Hat lifts up the box, taking the lid off again. The orb again speaks in white, but in very large (and square-like) capital letters. Black Hat's answer is written in black, but can still be seen thanks to the light emitted by the AI, even against the black background.]

- AI: LET ME BACK INTO THE BOX

- Black Hat: Aaa! OK!!!

- Box: AI

- [All the darkness and light disappears as the AI flies back into the box the same way it flew out, with a dotted line going from the center of the frame into the small opening between the lid and the box as Black Hat holds the box lower. Cueball just watches. There is a sound effect as the orb re-enters the box:]

- Shoop

- Box: AI

- [Black Hat and Cueball look silently down at the closed box, which is again standing next to the laptop, although disconnected.]

- Box: AI - www.explainxkcd.com/wiki/index.php/1450:_AI-Box_Experiment

Civilization is poised on the edge of technological collapse. Thomas Kirby, employed at a floundering company, endures life on the brink, plagued by recurring nightmares and the fear of losing his job. He wants nothing more than to provide for his family and bring some stability into their lives.

Salvation seems to be at hand. His friend Roko Kasun, an artificial-intelligence researcher, shares a world-shattering secret. He’s on the cusp of launching a Super-intelligent AI – a guardian-angel that promises to usher in a new world order. Only after Thomas teams up with his ambitious friend does Roko reveal the catch: his preparations to summon this god-like savior have not gone unnoticed. Roko has drawn the gaze of the Basilisk – a shadowy power capable of ensuring or extinguishing humanity’s only chance at survival.

To unravel the threat, Thomas will question everything. Is there a connection between Roko and the Basilisk, or can Roko be trusted to control the future? If Roko is lying, Thomas is the only one who can stop him. If Roko is telling the truth, Thomas must now show the courage to assist him in the most important act ever taken in human history.

It’s not often an author whose work I’ve never encountered before comes along and just slays me. This happened with Michael Blackbourn and Roko’s Basilisk.

This book just lit up my brain like an automated slot machine in Vegas paying out the jackpot.

When I first read the blurb I was more than a little bit intimidated. High-brow, intellectual Sci-Fi usually isn’t my cup of tea. Usually the details, and having to pay attention to a load of what seems to me like mumbo jumbo, become tiring, I get bored, and off I trot to read something with spaceships and aliens fighting each other instead. This wasn’t the case with Roko’s Basilisk. Despite this being some of the most intelligent Sci-Fi I’ve ever read, Blackbourn’s writing style makes it a joy to absorb. He has a knack, it seems, for making complex notions and ideas simple to digest. I understood this perfectly, while an author of lesser talent would have had me scratching my head wondering what the hell was going on.

I’m being deliberately light on plot description here. At 45 pages this novella, if you read it in one sitting like I did, will only take about an hour, and I don’t want to rob you of a single minute of the joy I got out of this. It evoked feelings for me similar to the first time I saw Terminator, or the first time I read Demon Seed by Dean Koontz.

Blackbourn’s story structure here is a brave choice, jumping from the character’s present to his “hallucinations/nightmares”. At the beginning I had a bit of an issue getting a handle on it, but within a few chapters it was second nature and easy to follow.

The world building in this little novella is outstanding. Talk of food shortages, with foodstuffs common now replaced by protein taken from insects, or “Land-Shrimp”. Vitavax health shots, nanobots that swim through your bloodstream repairing problems, automated cars. All of these things really helped me imagine the world that Thomas the protagonist lives in, or at least thinks he lives in…

Dialogue here is perfect, people speak in this book how they speak in real life. That’s important. A lot of books offered to me for review on this site never see the light of day because the authors can’t write realistic conversations between characters.

This was honestly one of the best Sci-Fi stories I’ve ever read. Tight, economical and beautifully written. Have I mentioned yet how utterly terrifying it is as well? This story will give you pause to think about A.I. and what it would mean to have truly sentient Artificial intelligence.

The sequel, Roko’s Labyrinth, is already finished and the third installment is currently being written, so all you series lovers needn’t fret: there’s more where this came from. I can’t wait to read them.
