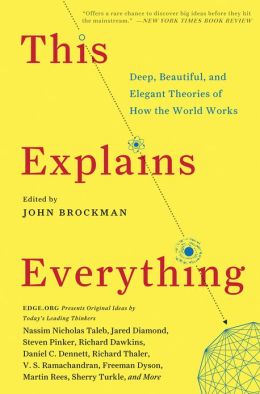

Another of the deep questions that contributors to Edge, the third-culture portal, answer with even deeper and more elegant thoughts. The question for 2012 was: What is your favorite elegant or beautiful explanation [of everything]?

Mr. Brockman, the editor and publisher of Edge.org, asked the thinkers in his online science community to share their favorite “deep, elegant or beautiful” explanation. What sounds like a canned conversation starter at a dinner party of geeks ends up yielding a handy collection of 150 shortcuts to understanding how the world works. Elegance in this context means, as the evolutionary biologist Richard Dawkins writes, the “power to explain much while assuming little.” While some offerings can be incomprehensible (including the one entitled “Why Is Our World Comprehensible?”), others keep it simpler. Marcel Kinsbourne, a professor of psychology at the New School, describes how good ideas surface. Philip Campbell, editor in chief of Nature, tackles the beauty of sunrises. Among the things this book will teach you? How much you don’t know. - SUSANNAH MEADOWS

Scientists' greatest pleasure comes from theories that derive the solution to some deep puzzle from a small set of simple principles in a surprising way. These explanations are called "beautiful" or "elegant". Historical examples are Kepler's explanation of complex planetary motions as simple ellipses, Bohr's explanation of the periodic table of the elements in terms of electron shells, and Watson and Crick's double helix. Einstein famously said that he did not need experimental confirmation of his general theory of relativity because it "was so beautiful it had to be true."

WHAT IS YOUR FAVORITE DEEP, ELEGANT, OR BEAUTIFUL EXPLANATION?

Since this question is about explanation, answers may embrace scientific thinking in the broadest sense: as the most reliable way of gaining knowledge about anything, including other fields of inquiry such as philosophy, mathematics, economics, history, political theory, literary theory, or the human spirit. The only requirement is that some simple and non-obvious idea explain some diverse and complicated set of phenomena.

192 CONTRIBUTORS (128,500 words): Emanuel Derman, Nicholas Humphrey, Dylan Evans, Howard Gardner, Jeremy Bernstein, Rudy Rucker, Michael Shermer, Nicholas Carr, Susan Blackmore, Scott Atran, David Christian, Andy Clark, Donald Hoffman, Derek Lowe, Roger Schank, Arnold Trehub, Timothy Taylor, Cliff Pickover, Ed Regis, Jared Diamond, Robert Provine, Richard Nisbett, Peter Woit, Haim Harari, Satyajit Das, Juan Enriquez, Jamshed Bharucha, Richard Foreman, Scott D. Sampson, Jonathan Gottschall, Keith Devlin, Clay Shirky, Steven Pinker, Gloria Origgi, Sean Carroll, Irene Pepperberg, Tor Nørretranders, Alan Alda, Jennifer Jacquet, George Dyson, Nigel Goldenfeld, Aubrey De Grey, Nassim Nicholas Taleb, George Church, Kevin Kelly, Stephen M. Kosslyn and Robin S. Rosenberg, Lawrence M. Krauss, James Croak, Armand Marie Leroi, Leonard Susskind, Douglas Rushkoff, Victoria Stodden, Daniel C. Dennett, Shing-tung Yau, Philip Campbell, Freeman Dyson, Mihaly Csikszentmihalyi, Martin Rees, Stanislas Dehaene, Samuel Arbesman, David Gelernter, Timothy D. Wilson, Judith Rich Harris, Samuel Barondes, Peter Atkins, Robert Kurzban, Todd C. Sacktor, Gerald Holton, Frank Wilczek, Elizabeth Dunn, Eric J. Topol, Lee Smolin, Roger Highfield, Michael I. Norton, Richard Dawkins, Neil Gershenfeld, Alison Gopnik, Terrence J. Sejnowski, Rodney Brooks, Philip Zimbardo, Christopher Sykes, Nicholas A. Christakis, Marcel Kinsbourne, Thomas A. Bass, Randolph Nesse, Sherry Turkle, Gino Segre, Eric R. Kandel, Hugo Mercier, Beatrice Golomb, Benjamin Bergen, Alun Anderson, Alvy Ray Smith, Katinka Matson, Steve Giddings, Hans Ulrich Obrist, Gerd Gigerenzer, Gerald Smallberg, Paul Steinhardt, Adam Alter, Karl Sabbagh, David G. Myers, Luca De Biase, Stuart Pimm, James J. O'Donnell, Albert-László Barabási, Simon Baron-Cohen, Charles Seife, Patrick Bateson, Carlo Rovelli, Jordan Pollack, Robert Sapolsky, Frank Tipler, Bruce Parker, Marcelo Gleiser, Richard Saul Wurman, Gary Klein, Ernst Pöppel, Evgeny Morozov, Gregory Benford, S. Abbas Raza, Rebecca Newberger Goldstein, Thomas Metzinger, David Haig, Melanie Swan, Laurence C. Smith, John C. Mather, Seth Lloyd, P. Murali Doraiswamy, Marti Hearst, Jon Kleinberg, Kai Krause, Joel Gold, Simone Schnall, Paul Saffo, Lisa Randall, Brian Eno, Giulio Boccaletti, Paul Bloom, Timo Hannay, Anthony Grayling, Matt Ridley, Doug Coupland, Amanda Gefter, Bruce Hood, Gregory Paul, Stephon Alexander, Bart Kosko, John Tooby, Stuart Kauffman, Barry C. Smith, John Naughton, Helen Fisher, Virginia Heffernan, Dimitar Sasselov, Eric Weinstein, Max Tegmark, PZ Myers, Andrew Lih, Christine Finn, Gregory Cochran, John McWhorter, Michael Vassar, Brian Knutson, Eduardo Salcedo-Albaran, Antony Garrett Lisi, Helena Cronin, Tania Lombrozo, Kevin Hand, Seirian Sumner, David Eagleman, Tim O'Reilly, Marco Iacoboni, Raphael Bousso, David Dalrymple, Emily Pronin, Dave Winer, Alana Conner & Hazel Rose Markus, David Pizarro, Andrian Kreye, David Buss, Carl Zimmer, Stewart Brand, Anton Zeilinger, Carolyn Porco, Dan Sperber, Mahzarin Banaji, V.S. Ramachandran, Nathan Myhrvold, Charles Simonyi, Richard Thaler, Andrei Linde

Sleep Is the Interest We Have to Pay on the Capital Which is Called In at Death

By focusing on the common periodic nature of sleep and interest payments, Schopenhauer extends the metaphor of borrowing to life itself. Life and consciousness are the principal, death is the final repayment, and sleep is la petite mort, the periodic little death that renews.

Sleep is the interest we have to pay on the capital which is called in at death; and the higher the rate of interest and the more regularly it is paid, the further the date of redemption is postponed.

So wrote Arthur Schopenhauer, comparing life to finance in a universe that must keep its books balanced. At birth you receive a loan, consciousness and light borrowed from the void, leaving a hole in the emptiness. The hole will grow bigger each day. Nightly, by yielding temporarily to the darkness of sleep, you restore some of the emptiness and keep the hole from growing limitlessly. In the end you must pay back the principal, complete the void, and return the life originally lent you.

The Scientific Method—An Explanation For Explanations

Humans are a storytelling species. Throughout history we have told stories to each other and ourselves as one of the ways to understand the world around us. Every culture has its creation myth for how the universe came to be, but the stories do not stop at the big-picture view; other stories discuss every aspect of the world around us. We humans are chatterboxes, and we can't resist telling a story about just about everything.

However compelling and entertaining these stories may be, they fall short of being explanations because in the end they are only stories. For every story you can tell a different variation, or a different ending, without giving any reason to choose between them. If you are skeptical or try to test the veracity of these stories, you'll typically find most of them wanting. One response to this is to forbid skeptical inquiry, branding it as heresy. This meme is so compelling that it was independently developed by cultures around the globe; it is the origin of religion: a set of stories about the world that must be accepted on faith and never questioned.

Somewhere along the line a very different meme got started. Instead of forbidding inquiry into stories about the world, people tried the other extreme of encouraging continual questioning. Stories about aspects of the world can be questioned skeptically and tested with observations and experiments. If the story survives the tests, then provisionally at least one can accept it as something more than a mere story; it is a theory that has real explanatory power. It will never be more than a provisional explanation (we can never let down our skeptical guard), but these provisional explanations can be very useful. We call this process of making and vetting stories the scientific method.

For me, the scientific method is the ultimate elegant explanation. Indeed it is the ultimate foundation for anything worthy of the name "explanation". It makes no sense to talk about explanations without having a process for deciding which are right and which are wrong, and in a broad sense that is what the scientific method is about. All of the other wonderful explanations celebrated here owe their origin and credibility to the process by which they are verified—the scientific method.

This seems quite obvious to us now, but it took many thousands of years for people to develop the scientific method to a point where they could use it to build useful theories about the world. It was not, a priori, obvious that such a method would work. At one extreme, creation myths discuss the origin of the universe, and for thousands of years one could take the position that this will never be more than a story—how can humans ever figure out something that complicated and distant in space and time? It would be a bold bet to say that people reasoning with the scientific method could solve that puzzle.

Well, it has taken us a while, but by now an enormous amount is known about the composition of stars and galaxies and how the universe came to be. There are still gaps in our knowledge (and our skepticism will never stop), but we've made a lot of progress on cosmology and many other problems. Indeed, we know more about the composition of distant stars than about many things here on Earth. The scientific method has not conquered all great questions, and some issues remain elusive, but the spirit of the scientific method is that one does not shrink from the unknown. It is OK to say that we do not yet have a useful story for everything we are curious about, and we comfort ourselves that at some point in the future new explanations will fill the gaps in our current knowledge and, as often as not, raise new questions that highlight new gaps.

It's hard to overestimate the importance of the scientific method. Human culture contains much more than science, but science is the part that actually works; the rest is just stories. The rationally based inquiry that the scientific method enables is what has given us science and technology and lifestyles vastly different from those of our hunter-gatherer ancestors. In some sense it is analogous to evolution. The sum of millions of small mutations separates us from single-celled organisms like blue-green algae. Each mutation had to survive the test of selection and work better than the previous state in the sense of biological fitness. Human knowledge is the accumulation of millions of stories-that-work, each of which had to survive the test of the scientific method, matching observation and experiment better than its predecessors. Both evolution and science have taken us a long way, but looking forward it is clear that science will take us much farther.

Boscovich's Explanation Of Atomic Forces

A great example of how much insight can be gained from some very simple considerations is the explanation of atomic forces by the 18th-century Jesuit polymath Roger Boscovich, who was born in Dubrovnik.

One of the great philosophical arguments at the time took place between the adherents of Descartes, who (following Aristotle) thought that forces can only be the result of immediate contact, and those who followed Newton and believed in his concept of force acting at a distance. Newton was the revolutionary here, but his opponents argued, with some justification, that "action at a distance" brought back into physics "occult" explanations that do not follow from the "clear and distinct" understanding that Descartes demanded. (In the following I am paraphrasing reference works.) Boscovich, a forceful advocate of the Newtonian point of view, turned the question around: what exactly happens during the interaction that we would call immediate contact?

His arguments are very easy to understand and extremely convincing. Let's imagine two bodies, one of which is traveling at a speed of, say, 6 units, the other at a speed of 12, with the faster body catching up with the slower one along the same straight path. We imagine what transpires when the two bodies collide. By conservation of the "quantity of motion," both bodies should continue after the collision along the same path, each with a speed of 9 units (in the case of an inelastic collision, or, in the case of an elastic collision, for a brief period right after the collision).
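The figure of 9 units follows from conservation of momentum only if the two bodies have equal mass, which the text implies but does not state. A minimal sketch of that arithmetic, with `common_velocity` and the unit masses as illustrative assumptions:

```python
def common_velocity(m1, v1, m2, v2):
    """Velocity shared by two bodies that move together after colliding.

    Derived from conservation of momentum: m1*v1 + m2*v2 = (m1 + m2) * v.
    """
    return (m1 * v1 + m2 * v2) / (m1 + m2)


# Equal unit masses are an assumption; the text gives only the speeds 6 and 12.
print(common_velocity(1.0, 6.0, 1.0, 12.0))  # 9.0
```

With unequal masses the shared speed shifts toward that of the heavier body, but Boscovich's continuity argument is unaffected.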

But how did the velocity of the faster body come to be reduced from 12 to 9, and that of the slower body increased from 6 to 9? Clearly, the time interval for the change in velocities cannot be zero, for then, argued Boscovich, the instantaneous change in speed would violate the law of continuity. Furthermore, we would have to say that at the moment of impact, the speed of one body is simultaneously 12 and 9, which is patently absurd.

It is therefore necessary for the change in speed to take place in a small, yet finite, amount of time. But with this assumption, we arrive at yet another contradiction. Suppose, for example, that after a small interval of time, the speed of the faster body is 11, and that of the slower body is 7. But this would mean that they are not moving at the same velocity, and the front surface of the faster body would advance through the rear surface of the slower body, which is impossible because we have assumed that the bodies are impenetrable. It therefore becomes apparent that the interaction must take place immediately before the impact of the two bodies and that this interaction can only be a repulsive one because it is expressed in the slowing down of one body and the speeding up of the other.

Moreover, this argument is valid for arbitrary speeds, so one can no longer speak of definite dimensions for the particles that were until now thought of as impenetrable, namely, for the atoms. An atom should rather be viewed as a point source of force, with the force emanating from it acting in some complicated fashion that depends on distance.

According to Boscovich, when bodies are far apart, they act on each other through a force corresponding to the gravitational force, which is inversely proportional to the square of the distance. But with decreasing distance, this law must be modified because, in accordance with the above considerations, the force changes sign and must become a repulsive force. Boscovich even plotted fanciful traces of how the force should vary with distance, in which the force changed sign several times, hinting at the existence of minima in the potential and of stable bonds between the particles, the atoms.
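To make the sign change concrete, here is a toy force law, not Boscovich's actual curve. It keeps the inverse-square attraction at large distance and adds a hypothetical short-range repulsive term. It changes sign only once, whereas Boscovich's plots changed sign several times. The function names and the `a / r**4` term are assumptions chosen for illustration.

```python
def toy_force(r, a=1.0):
    """Toy force between two point atoms at distance r > 0.

    Positive values mean repulsion, negative values attraction.
    The a / r**4 repulsive term is an illustrative assumption.
    """
    return a / r**4 - 1.0 / r**2


def equilibrium_distance(a=1.0):
    """Distance at which the toy force vanishes (a / r**4 == 1 / r**2)."""
    return a ** 0.5


for r in (0.5, 1.0, 2.0):
    # Repulsive inside the equilibrium distance, attractive outside it.
    print(r, toy_force(r))
```

Because the force pushes the particles apart when they are closer than the equilibrium distance and pulls them together when they are farther away, that distance corresponds to a minimum of the potential: a stable bond in this simplified picture.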

With this idea Boscovich not only offered a new picture for interactions in place of the Aristotelian-Cartesian theory based on immediate contact, but also presaged our understanding of the structure of matter, especially that of solid bodies.

Commitment

It is a fundamental principle of economics that a person is always better off if they have more alternatives to choose from. But this principle is wrong. There are cases when I can make myself better off by restricting my future choices and committing myself to a specific course of action.

The idea of commitment as a strategy is an ancient one. Odysseus famously had his crew tie him to the mast so he could listen to the Sirens' songs without falling into the temptation to steer the ship into the rocks. And he committed his crew to not listening by filling their ears with wax. Another classic is Cortés's decision to burn his ships upon arriving in Mexico, thus removing retreat as one of the options his crew could consider. But although the idea is an old one, we did not begin to understand its nuances until Nobel Laureate Thomas Schelling wrote his 1956 masterpiece, "An Essay on Bargaining".

It is well known that thorny games such as the prisoner's dilemma can be solved if both players can credibly commit themselves to cooperating, but how can I convince you that I will cooperate when it is a dominant strategy for me to defect? (And, if you and I are game theorists, you know that I know that you know that I know that defecting is a dominant strategy.)
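A small sketch can show both halves of this claim: that defecting is dominant in the bare game, and that a credible commitment (modeled here as a penalty a player incurs if they defect) can make cooperating dominant instead. The prison sentences, the penalty size, and the function name are illustrative assumptions, not figures from Schelling.

```python
# Years in prison (lower is better); the numbers are illustrative assumptions.
# Key: (my move, your move) -> my sentence
SENTENCE = {
    ("cooperate", "cooperate"): 1,
    ("cooperate", "defect"): 10,
    ("defect", "cooperate"): 0,
    ("defect", "defect"): 5,
}
MOVES = ("cooperate", "defect")


def dominant_move(penalty_for_defecting=0):
    """Return the move that is strictly best against every opponent move, or None."""
    def cost(mine, yours):
        extra = penalty_for_defecting if mine == "defect" else 0
        return SENTENCE[(mine, yours)] + extra

    for mine in MOVES:
        others = [m for m in MOVES if m != mine]
        if all(cost(mine, yours) < cost(other, yours)
               for other in others for yours in MOVES):
            return mine
    return None


print(dominant_move())                         # defect
print(dominant_move(penalty_for_defecting=6))  # cooperate
```

The penalty only works if the other player believes it will really be imposed, which is why the credibility of the commitment matters as much as its size.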

Schelling gives many examples of how this can be done, but here is my favorite. A Denver rehabilitation clinic, whose clientele consisted of wealthy cocaine addicts, offered a "self-blackmail" strategy. Patients were offered an opportunity to write a self-incriminating letter that would be delivered if and only if the patient, who is tested on a random schedule, is found to have used cocaine. Most cocaine addicts will probably have no trouble thinking of something to write about, and will now have a very strong incentive to stay off drugs. They are committed.

Many of society's thorniest problems, from climate change to Middle East peace, could be solved if the relevant parties could only find a way to commit themselves to some future course of action. They would be well advised to study Tom Schelling in order to figure out how to make that commitment.

Why Is Our World Comprehensible?

"The most incomprehensible thing about the world is that it is comprehensible." This is one of the most famous quotes from Albert Einstein. "The fact that it is comprehensible is a miracle." Similarly, Eugene Wigner said that the unreasonable efficiency of mathematics is "a wonderful gift which we neither understand nor deserve." Thus we have a problem that may seem too metaphysical to be addressed in a meaningful way: Why do we live in a comprehensible universe with certain rules, which can be efficiently used for predicting our future?

One could always respond that God created the universe and made it simple enough so that we can comprehend it. This would match the words about a miracle and an undeserved gift. But shall we give up so easily? Let us consider several other questions of a similar type. Why is our universe so large? Why do parallel lines not intersect? Why do different parts of the universe look so similar? For a long time such questions looked too metaphysical to be considered seriously. Now we know that inflationary cosmology provides a possible answer to all of these questions. Let us see whether it might help us again.

To understand the issue, consider some examples of an incomprehensible universe where mathematics would be inefficient. Here is the first one: Suppose the universe is in a state with the Planck density ρ ~ 10⁹⁴ g/cm³. Quantum fluctuations of space-time in this regime are so large that all rulers are rapidly bending and shrinking in an unpredictable way. This happens faster than one could measure distance. All clocks are destroyed faster than one could measure time. All records about the previous events become erased, so one cannot remember anything and predict the future. The universe is incomprehensible for anybody living there, and the laws of mathematics cannot be efficiently used.

If the huge density example looks a bit extreme, rest assured that it is not. There are three basic types of universes: closed, open and flat. A typical closed universe created in the hot Big Bang would collapse in about 10⁻⁴³ seconds, in a state with the Planck density. A typical open universe would grow so fast that formation of galaxies would be impossible, and our body would be instantly torn apart. Nobody would be able to live and comprehend the universe in either of these two cases. We can enjoy life in a flat or nearly flat universe, but this requires fine-tuning of initial conditions at the moment of the Big Bang with an incredible accuracy of about 10⁻⁶⁰.

Recent developments in string theory, which is the most popular (though extremely complicated) candidate for the role of the theory of everything, reveal an even broader spectrum of possible but incomprehensible universes. According to the latest developments in string theory, we may have about 10⁵⁰⁰ (or more) choices of the possible state of the world surrounding us. All of these choices follow from the same string theory. However, the universes corresponding to each of these choices would look as if they were governed by different laws of physics; their common roots would be well hidden. Since there are so many different choices, some of them may describe the universe we live in. But most of these choices would lead to a universe where we would be unable to live and efficiently use mathematics and physics to predict the future.

At the time when Einstein and Wigner were trying to understand why our universe is comprehensible, everybody assumed that the universe is uniform and the laws of physics are the same everywhere. In this context, recent developments would only sharpen the formulation of the problem: We must be incredibly lucky to live in the universe where life is possible and the universe is comprehensible. This would indeed look like a miracle, like a "gift that we neither understand nor deserve." Can we do anything better than praying for a miracle?

During the last 30 years the way we think about our world changed profoundly. We found that inflation, the stage of an exponentially rapid expansion of the early universe, makes our part of the universe flat and extremely homogeneous. However, simultaneously with explaining why the observable part of the universe is so uniform, we also found that on a very large scale, well beyond the present visibility horizon of about 10¹⁰ light years, the universe becomes 100% non-uniform due to quantum effects amplified by inflation.

This means that instead of looking like an expanding spherically symmetric ball, our world looks like a multiverse, a collection of an incredibly large number of exponentially large bubbles. For (almost) all practical purposes, each of these bubbles looks like a separate universe. Different laws of the low energy physics operate inside each of these universes.

In some of these universes, quantum fluctuations are so large that any computations are impossible. Mathematics there is inefficient because predictions cannot be memorized and used. The lifetime of some of these universes is too short. Other universes are long-lived, but the laws of physics there do not allow the existence of anybody who could live long enough to learn physics and mathematics.

Fortunately, among all possible parts of the multiverse there should be some exponentially large parts where we may live. But our life is possible only if the laws of physics operating in our part of the multiverse allow formation of stable, long-living structures capable of making computations. This implies existence of stable (mathematical) relations that can be used for long-term predictions. Rapid development of the human race was possible only because we live in the part of the multiverse where the long-term predictions are so useful and efficient that they allow us to survive in the hostile environment and win in the competition with other species.

To summarize, the inflationary multiverse consists of myriads of 'universes' with all possible laws of physics and mathematics operating in each of them. We can only live in those universes where the laws of physics allow our existence, which requires making reliable predictions. In other words, mathematicians and physicists can only live in those universes which are comprehensible and where the laws of mathematics are efficient.

One can easily dismiss everything that I just said as a wild speculation. It seems very intriguing, however, that in the context of the new cosmological paradigm, which was developed during the last 30 years, we might be able, for the first time, to approach one of the most complicated and mysterious problems which bothered some of the best scientists of the 20th century.

Genes, Claustrum, and Consciousness

What's my favorite elegant idea? The elucidation of DNA's structure is surely the most obvious, but it bears repeating. I'll argue that the same strategy used to crack the genetic code might prove successful in cracking the "neural code" of consciousness and self. It's a long shot, but worth considering.

The ability to grasp analogies, and seeing the difference between deep and superficial ones, is a hallmark of many great scientists; Francis Crick and James Watson were no exception. Crick himself cautioned against the pursuit of elegance in biology, given that evolution proceeds happenstantially—"God is a hacker," he famously said, adding (according to my colleague Don Hoffman), "Many a young biologist has slit his own throat with Ockham's razor." Yet his own solution to the riddle of heredity ranks with natural selection as biology's most elegant discovery. Will a solution of similar elegance emerge for the problem of consciousness?

It is well known that Crick and Watson unraveled the double helical structure of the DNA molecule: two twisting complementary strands of nucleotides. Less well known is the chain of events culminating in this discovery.

First, Mendel's laws dictated that genes are particulate (a first approximation still held to be accurate). Then Thomas Morgan showed that fruit flies zapped with x-rays became mutants with punctate changes in their chromosomes, yielding the clear conclusion that the chromosomes are where the action is. Chromosomes are composed of histones and DNA; as early as 1928, the British bacteriologist Fred Griffith showed that a harmless species of bacterium, upon incubation with a heat-killed virulent species, actually changes into the virulent species! This was almost as startling as a pig walking into a room with a sheep and two sheep emerging. Later, Oswald Avery showed that DNA was the transformative principle here. In biology, knowledge of structure often leads to knowledge of function—one need look no further than the whole of medical history. Inspired by Griffith and Avery, Crick and Watson realized that the answer to the problem of heredity lay in the structure of DNA. Localization was critical, as, indeed, it may prove to be for brain function.

Crick and Watson didn't just describe DNA's structure, they explained its significance. They saw the analogy between the complementarity of molecular strands and the complementarity of parent and offspring—why pigs beget pigs and not sheep. At that moment modern biology was born.

I believe there are similar correlations between brain structure and mind function, between neurons and consciousness. I am stating the obvious here only because there are some philosophers, called "new mysterians," who believe the opposite. The erudite Colin McGinn has written, for instance, "The brain is only tangentially relevant to consciousness." (There are many philosophers who would disagree, e.g. Churchland, Dennett, and Searle.)

After his triumph with heredity, Crick turned to what he called the "second great riddle" in biology—consciousness. There were many skeptics. I remember a seminar Crick was giving on consciousness at the Salk Institute here in La Jolla. He'd barely started when a gentleman in attendance raised a hand and said, "But Doctor Crick, you haven't even bothered to define the word consciousness before embarking on this." Crick's response was memorable: "I'd remind you that there was never a time in the history of biology when a bunch of us sat around the table and said, 'Let's first define what we mean by life.' We just went out there and discovered what it was—a double helix. We leave matters of semantic hygiene to you philosophers."

Crick did not, in my opinion, succeed in solving consciousness (whatever that might mean). Nonetheless, I believe he was headed in the right direction. He had been richly rewarded earlier in his career for grasping the analogy between biological complementarities, the notion that the structural logic of the molecule dictates the functional logic of heredity. Given his phenomenal success using the strategy of structure-function analogy, it is hardly surprising that he imported the same style of thinking to study consciousness. He and his colleague Christof Koch did so by focusing on a relatively obscure structure called the claustrum.

The claustrum is a thin sheet of cells underlying the insular cortex of the brain, one on each hemisphere. It is histologically more homogeneous than most brain structures, and intriguingly, unlike most brain structures (which send and receive signals to and from a small subset of other structures), the claustrum is reciprocally connected with almost every cortical region. The structural and functional streamlining might ensure that, when waves of information come through the claustrum, its neurons will be exquisitely sensitive to the timing of the inputs.

What does this have to do with consciousness? Instead of focusing on pedantic philosophical issues, Crick and Koch began with their naïve intuitions. "Consciousness" has many attributes—continuity in time, a sense of agency or free will, recursiveness or "self-awareness," etc. But one attribute that stands out is subjective unity: you experience all your diverse sense impressions, thoughts, willed actions and memories as being a unity—not jittery or fragmented. This attribute of consciousness, with the accompanying sense of the immediate "present" or "here and now," is so obvious that we don't usually think about it; we regard it as axiomatic.

So a central feature of consciousness is its unity, and here is a brain structure that sends and receives signals to and from practically all other brain structures, including the right parietal (involved in polysensory convergence and embodiment) and anterior cingulate (involved in the experience of "free will"). Thus the claustrum seems to unify everything anatomically, and consciousness does so mentally. Crick and Koch recognized that this may not be a coincidence: the claustrum may be central to consciousness; indeed it may embody the idea of the "Cartesian theater" that's taboo among philosophers, or at least be the conductor of the orchestra. This is the kind of childlike reasoning that often leads to great discoveries. Obviously, such analogies don't replace rigorous science, but they're a good place to start. Crick and Koch may be right or wrong, but their idea is elegant. If they're right, they've paved the way to solving one of the great mysteries of biology. Even if they're wrong, students entering the field would do well to emulate their style. Crick has been right too often to ignore.

I visited him at his home in La Jolla in July of 2004. He saw me to the door as I was leaving and as we parted, gave me a sly, conspiratorial wink: "I think it's the claustrum, Rama; it's where the secret is." A week later he passed away.

"We Are Dreaming Machines That Construct Virtual Models Of The Real World"

The most beautiful and elegant explanation should be as strong and overwhelming as a brick smashing your head; it should break your life in two. For instance, as a result of that explanation, you should realize that even if you are dreaming, your brain is active doing what it does best: creating models of reality or, in fact, creating the reality you live in.

Descartes was aware of this fact, and that's why he concluded "I think, therefore I am" (cogito ergo sum). You can think of yourself as walking in a park, but this could be just a vivid dream. Therefore, it's not possible to conclude anything about your existence based on the apparent fact of walking. However, whether you are really walking in a park or only dreaming, you are thinking, and therefore existing. Dreaming is so similar to waking that you can't trust any sensory information as proof of your existence. You can only trust the fact of thinking or, in contemporary words, the fact that your brain is active.

Dreaming and waking are similar cognitive states, as Rodolfo Llinás says in his masterpiece "I of the Vortex". The only difference is that while dreaming, your brain is not perceiving or representing external reality; it is emulating it and providing self-generated inputs.

The explanation is also shocking in its consequence. While waking we are also dreaming, concludes Llinás: "The waking state is a dreamlike state (…) guided and shaped by the senses, whereas regular dreaming does not involve the senses at all".

In both cases our brain generates models of reality.

With this explanation very few entities—the brain and the matter of reality—are enough to remind us how we create what is usually defined as "reality": "The only reality that exists for us is already a virtual one (…). We are basically dreaming machines that construct virtual models of the real world", says Llinás.

This is not only a beautiful explanation because of the poetic fact that reality is self-generated while dreaming, and partially generated while waking. Is there anything more beautiful than understanding how to create reality?

This is not only an elegant explanation because it shows our minuscule and entirely representative place in the ontological and physical reality, in the huge amount of matter defined as universe.

This explanation is overwhelming in practical terms because, as a philosopher and social scientist, I cannot explain physical or social reality without considering that we live and move in a model of reality. Including the representational, creative and even ontological role of the brain is a naturalization project usually omitted as a result of hyper-positivism and scientific fragmentation. From Descartes to Llinás, from the understanding of galaxies to the understanding of crime, this explanation should be relevant in most scientific enterprises.

Like Attracts Like

The beauty of this explanation is twofold. First, it accounts for the complex organization of the cerebral cortex (the most recent evolutionary component of the brain) using a very simple rule. Second, it deals with scaling issues very well, and indeed it also accounts for a specific phenomenon in a widespread human behavior, imitation. It explains how neurons packed themselves in the cerebral cortex and how humans relate to each other. Not a small feat.

Let's start from the brain. The idea that neurons with similar properties cluster together is theoretically appealing, because it minimizes costs associated with transmission of information. This idea is also supported by empirical evidence (it does not always happen that a theoretically appealing idea is supported by empirical data, sadly). Indeed, more than a century of a variety of brain mapping techniques demonstrated the existence of 'visual cortex' (here we find neurons that respond to visual stimuli), 'auditory cortex' (here we find neurons that respond to sounds), 'somatosensory cortex' (here we find neurons that respond to touch), and so forth. When we zoom in and look in detail at each type of cortex, we also find that the 'like attracts like' principle works well. The brain forms topographic maps. For instance, let's look at the 'motor cortex' (here we find neurons that send signals to our muscles so that we can move our body, walk, grasp things, move the eyes and explore the space surrounding us, speak, and obviously type on a keyboard, as I am doing now). In the motor cortex there is a map of the body, with neurons sending signals to hand muscles clustering together and being separate from neurons sending signals to feet or face muscles. So far, so good.

In the motor cortex, however, we also find multiple maps for the same body part (for instance, the hand). Furthermore, these multiple maps are not adjacent. What is going on here? It turns out that body parts are only one of the variables that are mapped by the motor cortex. Other important variables are, for instance, different types of coordinated actions and the sector of space in which the action ends. The coordinated actions that are mapped by the motor cortex belong to a number of categories, most notably defensive actions (that is, actions to defend one's own body), hand-to-mouth actions (important to eat and drink!), and manipulative actions (using skilled finger movements to manipulate objects). The problem here is that there are multiple dimensions that are mapped onto a two-dimensional entity (we can flatten the cerebral cortex and visualize it as a surface area). This problem needs to be solved with a process of dimensionality reduction. Computational studies have shown that algorithms that do dimensionality reduction while optimizing the similarity of neighboring points (our 'like attracts like' principle) produce maps that reproduce well the complex, somewhat fractured maps described by empirical studies of the motor cortex. Thus, the principle of 'like attracts like' seems to work well even when multiple dimensions must be mapped onto a two-dimensional entity (our cerebral cortex).
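To make the idea of dimensionality reduction under a 'like attracts like' rule concrete, here is a minimal sketch in Python. The essay does not say which algorithm the computational studies used; a Kohonen self-organizing map is one common algorithm of this kind and is assumed here purely for illustration. The three input features (body part, action category, end location), the grid size, and the function names `train_som` and `neighbor_dissimilarity` are all hypothetical.

```python
import math
import random


def sq_dist(a, b):
    """Squared Euclidean distance between two feature vectors."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def train_som(samples, rows, cols, steps=2000, seed=0):
    """Fit a small self-organizing map (assumed algorithm, not the essay's).

    Returns a rows x cols grid in which each cell holds a feature vector.
    """
    rng = random.Random(seed)
    dim = len(samples[0])
    grid = [[[rng.random() for _ in range(dim)] for _ in range(cols)]
            for _ in range(rows)]
    cells = [(r, c) for r in range(rows) for c in range(cols)]
    for step in range(steps):
        frac = step / steps
        rate = 0.5 * (1.0 - frac) + 0.01                    # learning rate decays
        radius = max(rows, cols) / 2 * (1.0 - frac) + 0.5   # neighborhood shrinks
        x = rng.choice(samples)
        # Find the cell whose vector is most similar to the sample.
        br, bc = min(cells, key=lambda rc: sq_dist(grid[rc[0]][rc[1]], x))
        # Pull that cell and its grid neighbors toward the sample, so that
        # nearby cells end up representing similar things.
        for r, c in cells:
            d2 = (r - br) ** 2 + (c - bc) ** 2
            pull = rate * math.exp(-d2 / (2 * radius ** 2))
            w = grid[r][c]
            for i in range(dim):
                w[i] += pull * (x[i] - w[i])
    return grid


def neighbor_dissimilarity(grid):
    """Mean distance between the vectors of adjacent grid cells."""
    rows, cols = len(grid), len(grid[0])
    dists = []
    for r in range(rows):
        for c in range(cols):
            if r + 1 < rows:
                dists.append(math.sqrt(sq_dist(grid[r][c], grid[r + 1][c])))
            if c + 1 < cols:
                dists.append(math.sqrt(sq_dist(grid[r][c], grid[r][c + 1])))
    return sum(dists) / len(dists)


if __name__ == "__main__":
    rng = random.Random(1)
    # Hypothetical 3-D inputs: (body part, action category, end location),
    # each scaled to the range 0..1.
    samples = [[rng.random() for _ in range(3)] for _ in range(200)]
    untrained = train_som(samples, 8, 8, steps=0)
    trained = train_som(samples, 8, 8, steps=2000)
    print("neighbor dissimilarity before:", neighbor_dissimilarity(untrained))
    print("neighbor dissimilarity after: ", neighbor_dissimilarity(trained))
```

Running the script prints the average dissimilarity between neighboring cells before and after training. Because three dimensions cannot be laid out smoothly on a two-dimensional sheet, a trained map of this kind typically shows folds and repeated patches for a single feature, which is the sort of fractured layout the paragraph describes.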

Let's move to human behavior. Imitation in humans is widespread and often automatic. It is important for learning and the transmission of culture. We tend to align our movements (and even words!) during social interactions without even realizing it. However, we don't imitate all other people equally. Perhaps not surprisingly, we tend to imitate people who are like us more than others. Soon after birth, infants prefer faces of their own race and respond more receptively to strangers of their own race. Adults make education and even career choices that are influenced by models of their own race. This phenomenon is called self-similarity bias. Since imitation increases liking, the self-similarity bias most likely influences our social preferences too. We tend to imitate others who are like us, and by doing that, we tend to like those people even more. From neurons to people, the very simple principle of 'like attracts like' has remarkable explanatory power. This is what an elegant scientific explanation is supposed to do: explain a lot in a simple way.

The beauty of this explanation is twofold. First, it accounts for the complex organization of the cerebral cortex (the most recent evolutionary component of the brain) using a very simple rule. Second, it scales very well: it also accounts for a specific phenomenon in a widespread human behavior, imitation. It explains how neurons pack themselves into the cerebral cortex and how humans relate to each other. No small feat.

Sexual Conflict Theory

A fascinating parallel has occurred in the history of the traditionally separate disciplines of evolutionary biology and psychology. Biologists historically viewed reproduction as an inherently cooperative venture. A male and female would couple for the shared goal of reproduction of mutual offspring. In psychology, romantic harmony was presumed to be the normal state. Major conflicts within romantic couples were and still are typically seen as signs of dysfunction. A radical reformulation embodied by sexual conflict theory changes these views.

Sexual conflict occurs whenever the reproductive interests of an individual male and individual female diverge, or more precisely when the "interests" of genes inhabiting individual male and female interactants diverge. Sexual conflict theory defines the many circumstances in which discord is predictable and entirely expected.

Consider deception on the mating market. If a man is pursuing a short-term mating strategy and the woman in whom he has a sexual interest is pursuing a long-term mating strategy, conflict between these interactants is virtually inevitable. Men are known to feign long-term commitment, interest, or emotional involvement with the goal of casual sex, interfering with women's long-term mating strategy. Men have evolved sophisticated strategies of sexual exploitation. Conversely, women sometimes present themselves as costless sexual opportunities, and then intercalate themselves into a man's mating mind to such a profound degree that he wakes up one morning and suddenly realizes that he can't live without her: one version of the 'bait and switch' tactic in women's evolved arsenal.

Once coupled in a long-term romantic union, a man and a woman often still diverge in their evolutionary interests. A sexual infidelity by the woman might benefit her by securing superior genes for her progeny, an event that comes with catastrophic costs to her hapless partner who unknowingly devotes resources to a rival's child. From a woman's perspective, a man's infidelity risks the diversion of precious resources to rival women and their children. It poses the danger of losing the man's commitment entirely. Sexual infidelity, emotional infidelity, and resource infidelity are such common sources of sexual conflict that theorists have coined distinct phrases for each.

But all is not lost. As evolutionist Helena Cronin has eloquently noted, sexual conflict arises in the context of sexual cooperation. The evolutionary conditions for sexual cooperation are well-specified: When relationships are entirely monogamous; when there is zero probability of infidelity or defection; when the couple produces offspring together, the shared vehicles of their genetic cargo; and when joint resources cannot be differentially channeled, such as to one set of in-laws versus another.

These conditions are sometimes met, leading to great love and harmony between a man and a woman. Yet the prevalence of deception, sexual coercion, stalking, intimate partner violence, murder, and the many forms of infidelity reveals that conflict between the sexes is ubiquitous. Sexual conflict theory, a logical consequence of modern evolutionary genetics, provides the most beautiful theoretical explanation for these darker sides of human sexual interaction.

An Explanation of Fundamental Particle Physics That Doesn't Exist Yet

My favorite explanation is one that does not yet exist.

Research in fundamental particle physics has culminated in our current Standard Model of elementary particles. Using ever larger machines, we have been able to identify and determine the properties of a whole zoo of elementary particles. These properties present many interesting patterns. All the matter we see around us is composed of electrons and up and down quarks, interacting differently with photons of electromagnetism, W and Z bosons of the weak force, gluons of the strong force, and gravity, according to their different values and kinds of charges. Additionally, an interaction between a W and an electron produces an electron neutrino, and these neutrinos are now known to permeate space—flying through us in great quantities, interacting only weakly. A neutrino passing through the earth probably wouldn't even notice it was there. Together, the electron, electron neutrino, and up and down quarks constitute what is called the first generation of fermions. Using high energy particle colliders, physicists have been able to see even more particles. It turns out the first generation fermions have second and third generation partners, with identical charges to the first but larger masses. And nobody knows why. The second generation partner to the electron is called the muon, and the third generation partner is called the tau. Similarly, the down quark is partnered with the strange and bottom quarks, and the up quark has partners called the charm and top, with the top discovered in 1995. Last and least, the electron neutrinos are partnered with muon and tau neutrinos. All of these fermions have different masses, arising from their interaction with a theorized background Higgs field. Once again, nobody knows why there are three generations, or why these particles have the masses they do. The Standard Model, our best current description of fundamental physics, lacks a good explanation.