A Momentary Flow

Rebuilding worldviews one world at a time

An excellent blog that collects and reblogs ideas and information from the techno-neuro-media-metaphysical physics of contemporary (and future) life.

http://wildcat2030.tumblr.com/

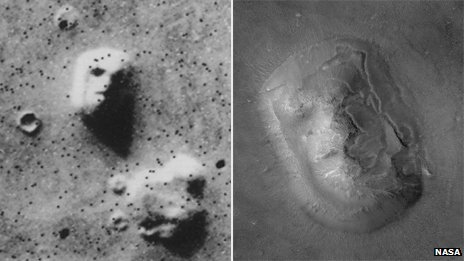

People have long seen faces in the Moon, in oddly-shaped vegetables and even burnt toast, but a Berlin-based group is scouring the planet via satellite imagery for human-like features. What’s behind our desire to see faces in our surroundings, asks Lauren Everitt.

Most people have never heard of pareidolia. But nearly everyone has experienced it.

Anyone who has looked at the Moon and spotted two eyes, a nose and a mouth has felt the pull of pareidolia.

It’s “the imagined perception of a pattern or meaning where it does not actually exist”, according to the World English Dictionary. It’s picking a face out of a knotted tree trunk or finding zoo animals in the clouds.

German design studio Onformative is undertaking perhaps the world’s largest and most systematic search for pareidolia. Their Google Faces programme will spend the next few months sniffing out face-like shapes in Google Maps. (via BBC News - Pareidolia: Why we see faces in hills, the Moon and toasties)

We’re all mad here…

Mindscapes: First interview with a dead man - health - 23 May 2013 - New Scientist

See on Scoop.it - cognition

Cotard’s syndrome is the belief that your brain or body has died. New Scientist has the first media interview with someone who has come out the other side.

FastTFriend’s insight:

The power of metaphor: Graham’s belief “was a metaphor for how he felt about the world – his experiences no longer moved him. He felt he was in a limbo state caught between life and death”.

See on newscientist.com

No, Mermaids Do Not Exist

What Animal Planet’s fake documentaries don’t tell you about the ocean.

-

This week, Animal Planet aired two fake documentaries claiming to show scientific evidence of mermaids. I say “fake documentaries” because that’s exactly what The Body Found and The New Evidence are. The “scientists” interviewed in the show are actors, and there’s a brief disclaimer during the end credits. However, the Twitter conversation surrounding the show (#Mermaids) reveals that many viewers are unaware that the show isn’t real. (Sample Tweets: “After watching the documentary #Mermaids the body found … I believe there are mermaids!!!” and “90% of the ocean is unexplored and you’re telling me #mermaids don’t exist”, which has been retweeted more than 800 times.) It is, after all, airing on a network that claims to focus on educating viewers about the natural world. “The Body Found” was rightfully described as “the rotting carcass of science television,” and I was shocked to see Animal Planet air a sequel.

As a marine biologist, I can tell you unequivocally that despite millennia of humans exploring the ocean, no credible evidence of the existence of mermaids has ever been found. Some claim that manatees are the source of the legend, but you’d have to be at sea an awfully long time to think that a manatee is a beautiful woman. Sure, new species are discovered all the time, but while a new species of bird or insect is fascinating, it doesn’t mean “anything is possible,” and it is certainly not equivalent to finding a group of talking, thinking humanoids with fish tails covering half of their bodies. The confusion generated by “The Body Found” got to be so significant that the United States government issued an official statement on the matter. (via Mermaids aren’t real: Animal Planet’s fake documentaries misrepresent ocean life. - Slate Magazine)

Motorola shows off insane electronic tattoo and ‘vitamin authentication’ prototype wearables

-

At the D11 conference today, Regina Dugan, SVP for advanced technology and projects at Motorola, showed off some advanced projects. The first was a prototype electronic tattoo on her arm from MC10, about which she quipped “teenagers might not want to wear a watch, but you can be sure they’ll wear a tattoo just to piss off their parents.”

The second technology was even wilder: a pill from Proteus Digital Health that you can swallow and which is then powered by the acid in your stomach. Once ingested, it creates an 18-bit signal in your body — and thereby makes your entire person an “authentication token.” Dugan called it “vitamin authentication.” Motorola CEO Dennis Woodside, who earlier confirmed the Moto X phone, added that the Proteus pill was approved by the FDA. (via Motorola shows off insane electronic tattoo and ‘vitamin authentication’ prototype wearables | The Verge)

Art appreciation is measurable | Science Codex

Have you experienced seeing a painting or a play that has left you with no feelings whatsoever, whilst a friend thought it was beautiful and meaningful? Experts have argued for years about the feasibility of researching art appreciation, and what should be taken into consideration.

Neuroscientists believe that biological processes that take place in the brain decide whether one likes a work of art or not. Historians and philosophers say that this is far too narrow a viewpoint. They believe that what you know about the artist’s intentions, when the work was created, and other external factors, also affect how you experience a work of art.

Building bridges

A new model that combines both the historical and the psychological approach has been developed.

“We think that both traditions are just as important, although incomplete. We want to show that they complement each other,” says Rolf Reber, Professor of Psychology at the University of Bergen, Norway. Together with Nicolas Bullot, Doctor of Philosophy at Macquarie University in Australia, he has developed a new model to help us understand art appreciation. The results have been published in ‘Behavioral and Brain Sciences’ and are commented on by 27 scientists from different disciplines.

“Neuroscientists often measure brain activity to find out how much a test subject likes a work of art, without investigating whether he or she actually understands the work. This is insufficient, as artistic understanding also affects assessment,” says Reber.

Eye-opening experience

“We know from earlier research that a painting that is difficult – yet possible – to interpret, is felt to be more meaningful than a painting that one looks at and understands immediately. The painter Eugène Delacroix made use of this fact to depict war. Joseph Mallord William Turner did the same in ‘Snow storm’. When you have to struggle to understand, you can have an eye-opening experience, which the brain appreciates,” explains Reber.

He hopes that other scientists will use the Australian-Norwegian model.

“By measuring brain activity, interviewing test subjects about their thoughts and reactions, and charting their artistic knowledge, it’s possible to gain new and exciting insight into what makes people appreciate good works of art. The model can be used for visual art, music, theatre and literature,” says Reber.

Source: The University of Bergen

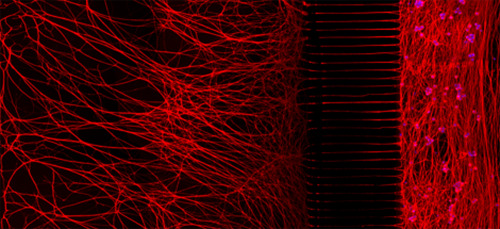

Distinguishing Brain From Mind

-

In coming years, neuroscience will answer questions we don’t even yet know to ask. Sometimes, though, focus on the brain is misleading.

-

From the recent announcement of President Obama’s BRAIN Initiative to the Technicolor brain scans (“This is your brain on God/love/envy etc”) on magazine covers all around, neuroscience has captured the public imagination like never before.

Understanding the brain is of course essential to developing treatments for devastating illnesses like schizophrenia and Parkinson’s. More abstract but no less compelling, the functioning of the brain is intimately tied to our sense of self, our identity, our memories and aspirations. But the excitement to explore the brain has spawned a new fixation that my colleague Scott Lilienfeld and I call neurocentrism — the view that human behavior can be best explained by looking solely or primarily at the brain.

Sometimes the neural level of explanation is appropriate. When scientists develop diagnostic tests or medications for, say, Alzheimer’s disease, they investigate the hallmarks of the condition: amyloid plaques that disrupt communication between neurons, and neurofibrillary tangles that degrade them.

Other times, a neural explanation can lead us astray. In my own field of addiction psychiatry, neurocentrism is ascendant — and not for the better. Thanks to heavy promotion by the National Institute on Drug Abuse, part of the National Institutes of Health, addiction has been labeled a “brain disease.” (via Distinguishing Brain From Mind - Sally Satel - The Atlantic)

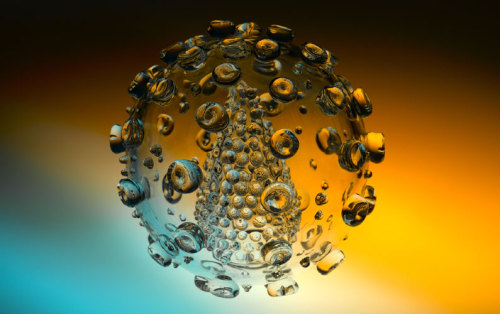

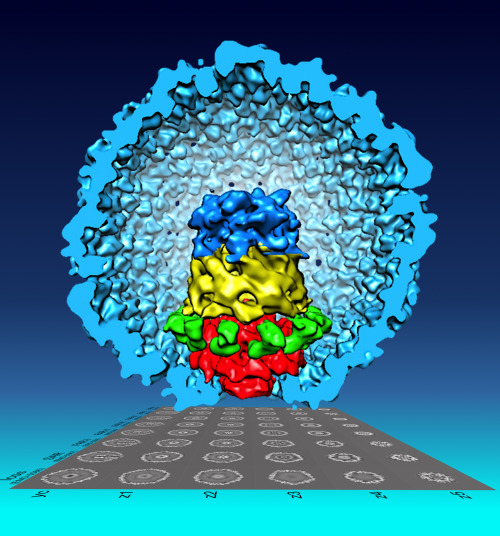

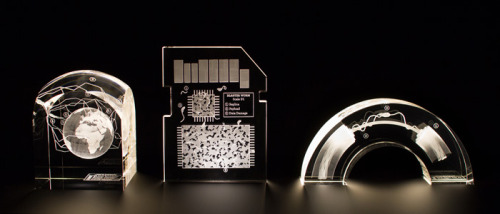

The above image shows a glass sculpture of an HIV particle. It was made by UK artist Luke Jerram, who received this letter in response from an anonymous fan in 2009:

Dear Luke,

I just saw a photo of your glass sculpture of HIV.

I can’t stop looking at it. Knowing that millions of those guys are in me, and will be a part of me for the rest of my life. Your sculpture, even as a photo, has made HIV much more real for me than any photo or illustration I’ve ever seen. It’s a very odd feeling seeing my enemy, and the eventual likely cause of my death, and finding it so beautiful.

Thank you.

“Knowmads are explorers of the uncertain, the indeterminate, the ambiguous, the oscillating and by consequence the disruptive”

A must-watch, portraying what the future of tech feels like. (W)

DJ Fresh & Mindtunes: A track created only by the mind

-

Published on May 29, 2013

Download the track “Mindtunes” here: http://bit.ly/10zvhvi. Proceeds go directly to the Queen Elizabeth’s Foundation for disabled people. Find out more about QEF on http://qef.org.uk/

Mindtunes is a track created by Andy, Jo and Mark, 3 physically disabled music fans, using only one instrument: their mind. The track was produced by DJ Fresh. Watch the music video: http://bit.ly/18tDipD

At Smirnoff we believe there’s a creator in every one of us. All you need are the means to get it out there, for everyone to see.

You have a mind, you can create. #yoursforthemaking

Special thanks to guest member Matthew.

Made with Emotiv EPOC technology.

(Documentary) (by SmirnoffEurope)

“Don’t explain your philosophy. Embody it.”

(via loveyourchaos)

Carnegie Mellon tracking algorithm inspired by Harry Potter’s Marauder’s map (w/ video)

(Phys.org) - Researchers from Carnegie Mellon have developed a way to find people through computer analysis, using facial recognition, color matching and location tracking. In homage to the fictional map used by Harry Potter, their system tracks people in the real world much as the Marauder’s Map locates and tracks people in Harry Potter’s magical world. Footage from a network of security cameras is analyzed by an algorithm that combines facial recognition, color matching of clothing, and a person’s expected position based on their last known location.

In designing the map, Shoou-I Yu, a PhD student at Carnegie Mellon University, who has been working on multi-object tracking in multi-camera environments for surveillance scenarios, sought to take on the challenge of finding and following individuals in complex indoor environments where walls and furniture may obstruct views. He and his team found a solution by combining several tracking techniques.
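To make the idea of combining several tracking cues a little more concrete, here is a minimal Python sketch. It is not the Carnegie Mellon team's algorithm, whose details the article does not give. The `Candidate` fields, the weights, the walking-speed limit and the linear position score are all assumptions chosen purely for illustration.

```python
import math
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class Candidate:
    """One detection in a camera frame (all fields are hypothetical)."""
    face_similarity: float          # 0..1, from some face matcher
    color_similarity: float         # 0..1, clothing color match
    position: Tuple[float, float]   # metres, floor-plan coordinates


def position_score(candidate_pos, last_pos, elapsed_s, max_speed_mps=2.0):
    """1.0 at the last known location, falling linearly to 0.0 at the edge
    of the area the person could have reached (assumed speed limit)."""
    reachable = max_speed_mps * elapsed_s
    if reachable <= 0:
        return 1.0 if candidate_pos == last_pos else 0.0
    distance = math.dist(candidate_pos, last_pos)
    return max(0.0, 1.0 - distance / reachable)


def combined_score(c: Candidate, last_pos, elapsed_s,
                   weights=(0.5, 0.3, 0.2)):
    """Weighted sum of the three cues; the weights are illustrative only."""
    w_face, w_color, w_pos = weights
    return (w_face * c.face_similarity
            + w_color * c.color_similarity
            + w_pos * position_score(c.position, last_pos, elapsed_s))


def best_match(candidates: List[Candidate], last_pos, elapsed_s):
    """Pick the detection most likely to be the tracked person."""
    return max(candidates,
               key=lambda c: combined_score(c, last_pos, elapsed_s))
```

In this sketch a detection with a strong face match but an implausible location can lose to one that matches moderately well on all three cues. That is the intuition behind fusing cues when walls and furniture obstruct the view.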

See on phys.org

IT’S A creepy sensation, having a little keyboard permanently emblazoned on the back of my hand. No matter what I do – shake my hand or wave it – I can’t make it go away. Creepier still are the soft tingles on my skin as each “key” is pressed, like a low-frequency electronic buzz.

I am in Masatoshi Ishikawa’s lab at the University of Tokyo, Japan, where the latest in interactive projections is being demonstrated for the first time. The set-up is in two parts: one is a projection that beams the outline of computer keyboards or cellphone keys onto any object, such as your hand.

Projecting interactive outlines of devices has been done before, but it is a lot trickier doing it on a moving object. Ishikawa’s system detects and maps the position of an object 500 times per second and projects an image onto it. It automatically controls a camera’s pan and tilt angles to ensure it is locked onto an object no matter how fast it moves. Ishikawa once demonstrated this technology by tracking a table-tennis ball in play.

The set-up also has a tactile angle. The information about the exact location of your hand and the orientation of the projected keypad is fed to a second system, called the Airborne Ultrasound Tactile Display. This makes the illuminated points on your hand or body slightly tingle with what feels like a jet of air. It’s an odd feeling, but I find it makes the projected image seem more real.

The sensation on the hand is generated by sound beamed by 2000 or so ultrasonic wave emitters, says its developer Hiroyuki Shinoda, also at the University of Tokyo. The carefully timed and directed sound beams can make any spot vibrate within a given cubic metre.

The combined system has already attracted interest from the auto, medical and gaming industries, says Ishikawa. (via Tingly projections make beamed gadgets come alive - tech - 30 May 2013 - New Scientist)

Personal clouds let you take control of your own data - tech - 31 May 2013 - New Scientist

A startup that lets you have your own cloud servers at home is part of a movement that is turning its back on conventional cloud computing

-

IS THIS the death of the cloud as we know it? Space Monkey certainly seems to think so – it is planning to build a better one. When its Kickstarter campaign ended last week, the startup had received more than three times its $100,000 funding target.

Set to launch in the next few months, Space Monkey aims to replace public cloud service providers such as Dropbox and Google Drive with a cloud of thousands of devices that sit in our homes. For a monthly fee, Space Monkey will lease subscribers a device containing a 2-terabyte hard drive and software that connects to all other Space Monkey devices on the internet.

Only half the storage space is for you – the rest is filled with other subscribers’ data. Everything stored on a Space Monkey device is copied and split into many encrypted pieces distributed over the network. If you want to watch one of your videos away from home, it will be put together from the pieces copied onto devices closest to your current location. It is much like torrent downloads from file-sharing websites, which assemble fragments of a file from different machines on a peer-to-peer network.
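The split-copy-reassemble idea described above can be sketched in a few lines of Python. This is only an illustration, not Space Monkey's actual design. The device names, the round-robin placement and the two-copy default are assumptions, and encryption is deliberately left out to keep the example short.

```python
from typing import Dict, List


def split_into_pieces(data: bytes, piece_size: int) -> List[bytes]:
    """Cut a file into fixed-size pieces (the last one may be shorter)."""
    return [data[i:i + piece_size] for i in range(0, len(data), piece_size)]


def distribute(pieces: List[bytes], device_names: List[str],
               copies: int = 2) -> Dict[str, Dict[int, bytes]]:
    """Place each piece on `copies` consecutive devices, round-robin.
    The placement policy is hypothetical."""
    placement: Dict[str, Dict[int, bytes]] = {name: {} for name in device_names}
    for index, piece in enumerate(pieces):
        for k in range(copies):
            device = device_names[(index + k) % len(device_names)]
            placement[device][index] = piece
    return placement


def reassemble(placement: Dict[str, Dict[int, bytes]], piece_count: int,
               preferred_devices: List[str]) -> bytes:
    """Rebuild the file, taking each piece from the first preferred
    (for example, nearest) device that holds a copy."""
    out = []
    for index in range(piece_count):
        for device in preferred_devices:
            if index in placement[device]:
                out.append(placement[device][index])
                break
        else:
            raise LookupError(f"piece {index} is not on any listed device")
    return b"".join(out)
```

Because every piece lives on more than one device, the file can still be rebuilt when one device is offline, and the order of `preferred_devices` stands in for "closest to your current location".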

Space Monkey claims that its cloud will give users upload and download speeds that are 12 times as fast as those offered by existing services – and as the network of subscribers grows the rate could be 60 times faster. “Each new user adds bandwidth,” says co-founder Alen Peacock.

See on newscientist.com

“

In a 2005 article, The Economist noted astutely that:

..the digital divide is not a problem in itself, but a symptom of deeper, more important divides: of income, development and literacy[…] So even if it were possible to wave a magic wand and cause a computer to appear in every household on earth, it would not achieve very much: a computer is not useful if you have no food or electricity and cannot read.

”

Our Equal Future: Does Technology Hold the Key to a Flatter World?

Far from making the world more fair, technology serves to reinforce, and perhaps even increase, inequalities.

-

Over the past two weeks, I, along with millions of other Trekkers, sat in a dark, air-conditioned theater and met once again the crew of the Enterprise, as I’ve done many times over the last few years on Netflix and on various hotel-room TVs. But the more I’ve watched, the more I’ve been visited by a nagging feeling that the whole concept is more escapist than even its sci-fi veneer would suggest. But what could possibly exceed warp speed in imagination? Aren’t holodecks already the pinnacle of escapism?

It was during the latest movie that I was able to put my finger on it. Much of the film takes place circa 2259 AD on Earth, and something clicked as the villain John Harrison gallivanted from one action sequence to another through the streets of London and San Francisco: In Star Trek, our planet is full of sky-bound towers and gleaming architecture, but unlike the darker futures of Robocop or Blade Runner, there are no slums. In the world envisioned by Gene Roddenberry, the poor are no longer with us.

..Flattening, democratizing, leveling…these are words frequently associated with digital technologies — but is that what they really do?

Is modafinil safe in the long term?

The media is full of stories about the amazing properties of smart drugs. But you could be putting your brain at risk, warns David Cox

-

Modafinil has emerged as the crown prince of smart drugs, that seductive group of pharmaceutical friends that promise enhanced memory, motivation, and an unrelenting ability to focus, all for hours at a time.

In the absence of long-term data, the media, particularly the student media, has tended to be relaxed about potential side-effects. The Oxford Tab, for example, simply shrugs: Who cares?

The novelist MJ Hyland, who suffers from multiple sclerosis, wrote a paean to the drug in the Guardian recently – understandably, for her, any potential side-effects are worth the risk given the benefits she’s experienced.

But should stressed students, tempted by a quick fix, be worried about what modafinil could be doing to their brains in the long term?

Professor Barbara Sahakian, at the University of Cambridge, has been researching modafinil as a possible clinical treatment for the cognitive problems of patients with psychosis. She’s fascinated by healthy people taking these drugs and has co-authored a recent book on the subject.

“Some people just want the competitive edge – they want to do better at exams so they can get into a better university or get a better degree. And there’s another group of people who want to function the best they can all the time. But people have also told me that they’ve used these drugs to help them do tasks that they’ve found not very interesting, or things they’ve been putting off.”

See on guardian.co.uk

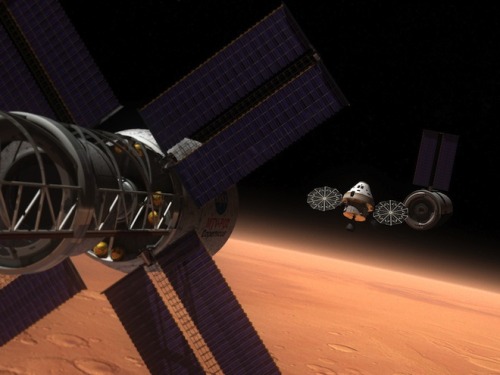

While humans have dreamed about going to Mars practically since it was discovered, an actual mission in the foreseeable future is finally starting to feel like a real possibility.

But how real is it? NASA says it’s serious about one day doing a manned mission while private companies are jockeying to present ever-more audacious plans to get there. And equally important, public enthusiasm for the Red Planet is riding high after the Curiosity rover’s spectacular landing and photo-rich mission.

Earlier this month, scientists, NASA officials, private space company representatives and other members of the spaceflight community gathered in Washington D.C. for three days to discuss all the challenges at the Humans to Mars (H2M) conference, hosted by the spaceflight advocacy group Explore Mars, which has called for a mission that would send astronauts in the 2030s.

But the Martian dust devil is in the details, and there is still one big problem: We currently lack the technology to get people to Mars and back. An interplanetary mission of that scale would likely be one of the most expensive and difficult engineering challenges of the 21st century.

“Mars is pretty far away,” NASA’s director of the International Space Station, Sam Scimemi said during the H2M conference. “It’s six orders of magnitude further than the space station. We would need to develop new ways to live away from the Earth and that’s never been done before. Ever.” (via Why We Can’t Send Humans to Mars Yet (And How We’ll Fix That) | Wired Science | Wired.com)
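The "six orders of magnitude" figure is easy to sanity-check. The distances below are not from the article. They are rounded, commonly cited values, assumed here for illustration: the space station orbits roughly 400 km up, and Mars ranges from about 55 million km to about 400 million km from Earth.

```python
import math

# Rounded figures assumed for illustration, not taken from the article.
ISS_ALTITUDE_KM = 400
MARS_CLOSEST_KM = 55e6
MARS_FARTHEST_KM = 400e6


def orders_of_magnitude(far_km: float, near_km: float) -> float:
    """How many powers of ten separate two distances."""
    return math.log10(far_km / near_km)


closest = orders_of_magnitude(MARS_CLOSEST_KM, ISS_ALTITUDE_KM)
farthest = orders_of_magnitude(MARS_FARTHEST_KM, ISS_ALTITUDE_KM)
print(f"closest approach: about {closest:.1f} orders of magnitude")
print(f"farthest point:   about {farthest:.1f} orders of magnitude")
```

Under these assumptions the gap is a little over five orders of magnitude at closest approach and about six at the farthest point, so the quote is in the right range.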

MIT Savant Can Predict How Many Re-Tweets You’ll Get | Wired Enterprise | Wired.com

Tauhid Zaman. Photo: Tauhid Zaman

-

It doesn’t matter if you’re Justin Bieber or James Baker. On Twitter, the first few minutes tell the whole story.

In fact, MIT Sloan Professor Tauhid Zaman has figured out a way to predict how many retweets a Twitter message will receive, just based on what happens in the first few minutes of its life.

“If a Tweet goes up, I can actually forecast ahead of time how many people are going to retweet it,” he says.

He can do this because pretty much all Twitter messages have the same lifecycle, and that can be mapped on a graph. Zaman wants to build a website that will analyze Tweets in real-time and make predictions about their popularity, but in the meantime, he’s set up a website that graphs the number of retweets that followed messages from people like Will.i.am, Newt Gingrich, and Kim Kardashian. He calls it the Twouija, a nod to the old Ouija board game that purports to predict your future.

The predictions are far from perfect. For example, Zaman took a look at Kim Kardashian’s April 15, 2012 message: “Happy Sunday tweeps! Have a blessed day!” After watching the retweets for five minutes, he predicted it would get 555 retweets within a few hours. In fact, it got 709. That’s off by 30 percent, though it’s not bad.
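The size of that miss depends on what you divide by. A quick check in Python, using only the two numbers quoted above (the function name is just for illustration):

```python
def relative_gap(predicted: int, actual: int, relative_to: int) -> float:
    """Absolute gap between forecast and outcome, as a share of a baseline."""
    return abs(actual - predicted) / relative_to


predicted, actual = 555, 709

# Share of the actual count that the forecast missed.
miss_vs_actual = relative_gap(predicted, actual, relative_to=actual)

# How far the actual count overshot the forecast.
overshoot_vs_forecast = relative_gap(predicted, actual, relative_to=predicted)

print(f"{miss_vs_actual:.1%} of the actual retweets were not forecast")
print(f"the actual count exceeded the forecast by {overshoot_vs_forecast:.1%}")
```

Measured against the actual count the forecast was about 22 percent short. Measured against the forecast, the actual count was about 28 percent higher. So the article's "30 percent" is a generous rounding of the second figure.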

But you can see each of the graphs following the same pattern: there are a lot of retweets during the first few minutes, and then this gradually tapers off in a somewhat predictable way.

“It doesn’t matter if you’re a bigshot or a nobody,” Zaman says. “If you’re going to get anything you’re going to get it in the first couple of hours, and this is the way you get it. It’s going to evolve like the kind of curve you see in Twouija.”

See on wired.com

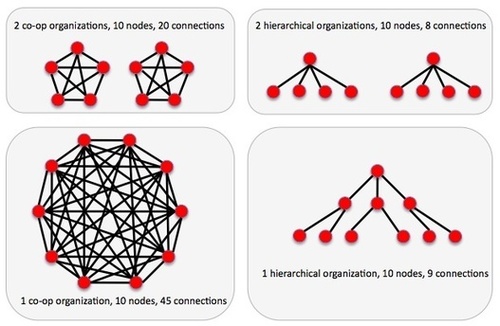

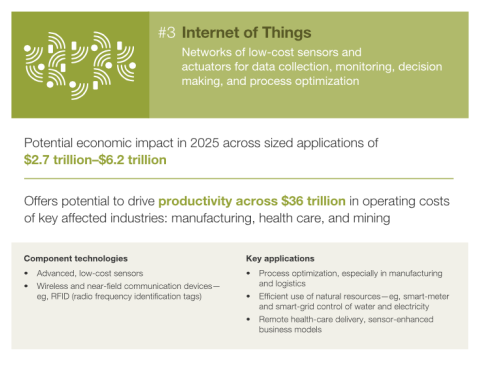

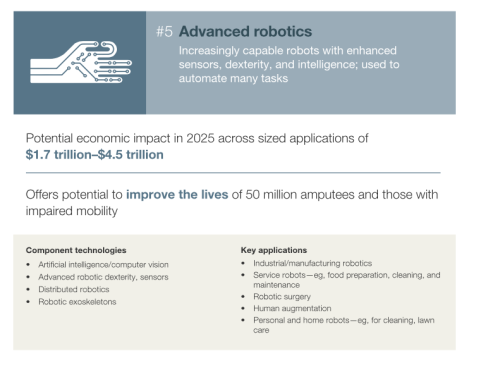

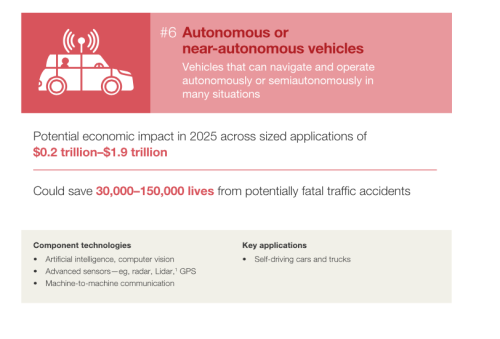

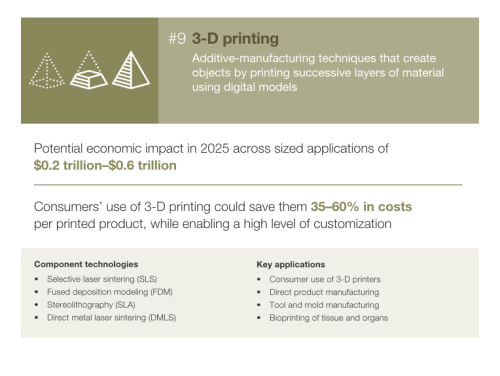

McKinsey publishes “Gallery of Disruptive Technologies”

McKinsey & Company have published a comprehensive white paper and visual slideshow gallery on the 12 most advanced disruptive technologies that will change our lives.

I have highlighted some of these technologies which are at the core of Future Tech Report.

For more information and to view the full slideshow (where the above 4 were originally taken from) of all 12 technologies visit: http://www.mckinsey.com/insights/business_technology/disruptive_technologies

“Walk in to any public space today, from a waiting room to a coffee shop, and note the disturbing absence of voices. We are there, and we are elsewhere. Our discussions are mediated via social networks, and conducted through touchscreen interfaces. Can we call them friends, this network of professional and social contacts we interact with through computers?”

Are we nearing the end of the distinction between our online lives and offline ones? It seems like the pull of our digital worlds is becoming so strong, we cannot drag ourselves away from them. We always wonder what is happening, what are we missing when we are just sitting at the dinner table or waiting on the subway platform? There is almost a level of panic, offline anxiety that we cannot escape. It is almost like the real world is not good enough.

(via shoutsandmumbles)

Studies have shown that online-only relationships can be just as powerful as offline-only relationships, but both together are the strongest.

Other researchers have argued that this is the end of the distinction between interpersonal and mass-media communication, and that it is all just mass-personal communication. Our communications can now be both hyperpersonal and mass-media simultaneously. This is what’s causing so much stress: we don’t know which is which anymore or how to behave.

(via tacanderson)

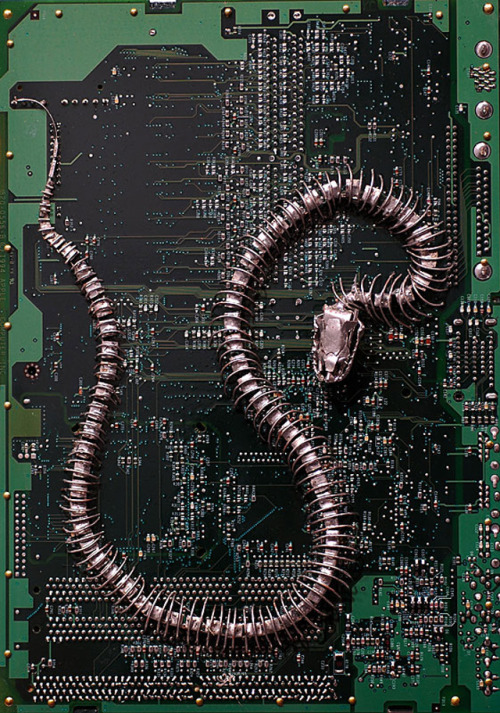

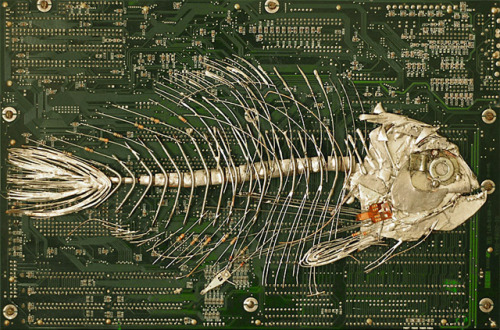

Remnants of the Ancient Circuit Boards

The found object is an often overlooked tool of inspiration. With a little bit of imagination, any found object can be used to create a work of art that reinterprets ideas of waste, recycling, or the effect our consumerist culture has on the environment.

Artist Peter McFarlane uses found objects to create sculptures that mimic Native imagery or to create circuit board landscapes of our urban jungle. The artist is not selective about his found objects; all materials are used. The use of computer waste is especially relevant to the artist’s work, because it is one of the most common things that we throw away now. These man-made electronic devices are becoming our modern-day fossils.

And McFarlane sees that. His circuit board fossils can be seen as a response to the way in which we change the history of the world with our waste. Where we once stumbled upon great discoveries of new species and long-dead prehistoric beasts, we are now more likely to dig up circuit boards from 1980s-era computers. These pieces are commentaries both on our lifestyles and on how nothing, not even new technology, can withstand the decay of time.

(via theremina)

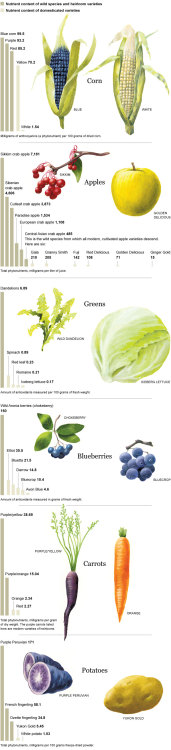

Nutritional Weaklings in the Supermarket

-

Here is how heirloom varieties and wild species of produce have been found, in separate studies, to outshine their cultivated cousins

“Samuel Taylor Coleridge famously declared that experiencing a story—any story—requires the reader’s “willing suspension of disbelief.” In Coleridge’s view, a reader reasons thus: “Yes, I know Coleridge’s bit about the Ancient Mariner is bunk. But in order to enjoy myself, I have to silence my inner skeptic and temporarily believe that the Ancient Mariner is real. Okay, there! Done!”

But as the Philbrick snip illustrates, will has so little to do with it. We come in contact with a storyteller who utters a magical incantation (for instance, “once upon a time”) and seizes our attention. If the storyteller is skilled, he simply invades us and takes over. There is little we can do to resist, aside from abruptly clapping the book shut.”

Parametric design - by am:Pm

(via bionikbastard)

“

Why bother modeling intelligence? One reason is that intelligence is arguably the most complex and unpredictable thing in our environment and is an important part of what makes up society, which we all have to live with. A big problem with modeling intelligence is that you are trying to use something to understand it, which seems likely to be quite difficult. However, if you can use models of models, ‘meta models’, and understand the characteristics of these, then this becomes possible.

Another reason for understanding intelligence is that society is faced with many problems, and intelligent understanding of these is a critical need. “Work smarter, not harder” is arguably the basis for social success, and being able to add AI (Artificial Intelligence) and AGI (Artificial General Intelligence) into that offers far more possibilities to be smart. A companion AGI may become considered a fundamental human right.

”

MODELING INTELLIGENCE

TR AMAT (PDF)

“In reality, every reader, while he is reading, is the reader of his own self. The writer’s work is merely a kind of optical instrument, which he offers to the reader to permit him to discern what, without the book, he would perhaps never have seen in himself. The reader’s recognition in his own self of what the book says is the proof of its truth.”

(via browsery)

Virus Particles Have More Individuality than Thought

Virus particles of the same type had been thought to have identical structures, like a mass-produced toy, but a new visualization technique developed by a Purdue Univ. researcher revealed otherwise.

Wen Jiang, an associate professor of biological sciences, found that an important viral substructure consisted of a collection of components that could be assembled in different ways, creating differences from particle to particle.

Read more: http://www.laboratoryequipment.com/news/2013/05/virus-particles-have-more-individuality-thought

Where Does Identity Come From?: Scientific American

A fascinating new neuroscience experiment probes an ancient philosophical question—and hints that you might want to get out more

-

Imagine we rewound the tape of your life. Your diplomas are pulled off of walls, unframed, and returned. Your children grow smaller, and then vanish. Soon, you too become smaller. Your adult teeth retract, your baby teeth return, and your traits and foibles start to slip away. Once language goes, you are not so much you as potential you. We keep rewinding still, until we’re halving and halving a colony of cells, finally arriving at that amazing singularity: the cell that will become you.

The question, of course, is what happens when we press “play” again. Are your talents, traits, and insecurities so deeply embedded in your genes that they’re basically inevitable? Or could things go rather differently with just a few tiny nudges? In other words, how much of your fate do you allot to your genes, versus your surroundings, versus chance? This is navel gazing that matters.

See on scientificamerican.com

Why the Internet Sucks You In Like a Black Hole: Scientific American

A lack of structural online boundaries tempts users into spending countless hours on the Web

-

“Checking Facebook should only take a minute.”

Those are the famous last words of countless people every day, right before getting sucked into several hours of watching cat videos, commenting on Instagrammed sushi lunches, and Googling to find out what ever happened to Dolph Lundgren.

If that sounds like you, don’t feel bad: That behavior is natural, given how the Internet is structured, experts say.

People are wired to compulsively seek unpredictable payoffs like those doled out on the Web. And the Internet’s omnipresence and lack of boundaries encourage people to lose track of time, making it hard to exercise the willpower to turn it off.

“The Internet is not addictive in the same way as pharmacological substances are,” said Tom Stafford, a cognitive scientist at the University of Sheffield in the United Kingdom. “But it’s compulsive; it’s compelling; it’s distracting.” [10 Easy Paths to Self Destruction]

See on scientificamerican.com

The End Of The World Isn’t As Likely As Humans Fighting Back

Editor’s Note: This post is part of Co.Exist’s Futurist Forum, a series of articles by some of the world’s leading futurists about what the world will look like in the near and distant future, and how you can improve how you navigate future scenarios…

-

Scenarios for the future that involve a horrifying end for humanity might make for exciting reading, but they’re the most unlikely of scenarios—and incredibly unhelpful in creating a better tomorrow.

See on fastcoexist.com

Bullish on Wearable Tech: Mary Meeker’s Annual State of the Internet Presentation | Wired Business | Wired.com

Every year (sometimes twice), longtime tech analyst turned venture capitalist Mary Meeker drops her state of the internet presentation. It’s that time of year again, and here it is.

Wildcat2030’s insight:

“wearables, drivables, flyables and scannables.”

See on wired.com

UN mulls ethics of ‘killer robots’

The ethics surrounding so-called killer robots will come under scrutiny as a report is presented to the UN’s Human Rights Council in Geneva.

-

So-called killer robots are due to be discussed at the UN Human Rights Council, meeting in Geneva.

A report presented to the meeting will call for a moratorium on their use while the ethical questions they raise are debated.

The robots are machines programmed in advance to take out people or targets, which - unlike drones - operate autonomously on the battlefield.

They are being developed by the US, UK and Israel, but have not yet been used.

Supporters say the “lethal autonomous robots”, as they are technically known, could save lives, by reducing the number of soldiers on the battlefield.

But human rights groups argue they raise serious moral questions about how we wage war, reports the BBC’s Imogen Foulkes in Geneva.

They include: Who takes the final decision to kill? Can a robot really distinguish between a military target and civilians?

See on bbc.co.uk

What may be the earliest creature yet discovered on the evolutionary line to birds has been unearthed in China.

The fossil animal, which retains impressions of feathers, is dated to be about 160 million years old.

Scientists have given it the name Aurornis, which means “dawn bird”. The significance of the find, they tell Nature magazine, is that it helps simplify not only our understanding of how birds emerged from dinosaurs but also of how powered flight originated.

Aurornis xui, to give it its full name, is preserved in a shale slab pulled from the famous fossil beds of Liaoning Province.

About 50cm tail to beak, the animal has very primitive skeletal features that put it right at the base of the avialans - the group that includes birds and their close relatives since the divergence from dinosaurs. (via BBC News - Archaeopteryx restored in fossil reshuffle)

The bionic man, and what he tells us about the future of being human

-

Rex, as he’s called, has been put together by an expert team for a forthcoming Channel 4 documentary ‘How to Build a Bionic Man’ - in an effort to show just how far prosthetic science has advanced. Tom Clarke reports.

(by Channel4News)

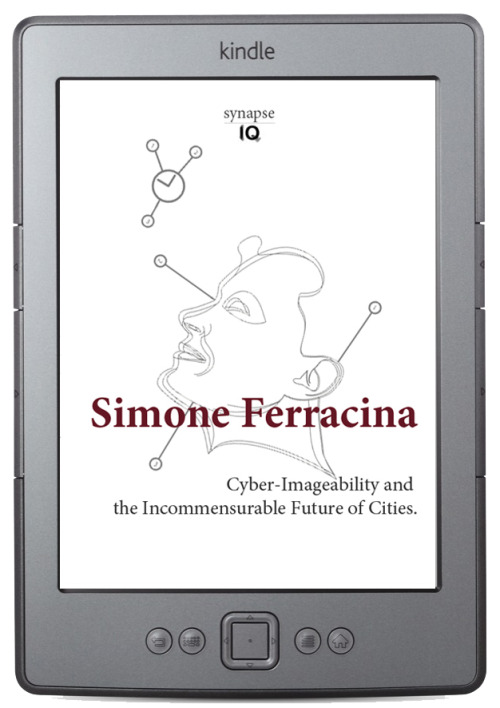

Cyber-Imageability and the Incommensurable Future of Cities..

Simone Ferracina

Synapse Series edited by César Reyes Nájera for dpr-barcelona.

Have you ever wondered how one can get up in the middle of the night and move in a pitch-dark room? What makes such nightly excursions possible is the development of a mental image of one’s home that tightly corresponds to the surrounding physical space.

But what if one could transfer this awareness from the domestic to the urban sphere? We might then be able to navigate the city with the same confidence that characterizes our domestic movements. While checking-in, liking, sharing and going through Augmented Reality experiences we might be unconsciously contributing to the construction of a nomadic, collective awareness.

Simone Ferracina outlines in this essay what it might be like to move inside the Augmented City – a metropolis built of mixed spaces that are continually created by subjects, yet not necessarily readable to them. He describes futuristic landscapes oscillating between bits and atoms, subjectivities and algorithms, anthropocentrism and ecological networks.

Welcome to the Augmented City, where you can customize the environment with electronic layers and provoke interactions that might lead to revolutions in obscure, digital autonomous zones.

Update your perceptions, and don’t trust the algorithms… start subverting them.

» Buy Simone Ferracina’s eBook on Amazon

“Wild dandelions, once a springtime treat for Native Americans, have seven times more phytonutrients than spinach, which we consider a “superfood.” A purple potato native to Peru has 28 times more cancer-fighting anthocyanins than common russet potatoes. One species of apple has a staggering 100 times more phytonutrients than the Golden Delicious displayed in our supermarkets.”

A new robot developed by researchers at Cornell University isn’t only capable of assisting you with tasks, it can accurately predict when you might need a hand. Armed with a Kinect sensor, the robot — developed by Ashutosh Saxena and his team of computer scientists — utilizes a dataset of 120 videos to analyze and understand your movements. It can then help you perform certain tasks including making a meal, stacking or arranging objects, or taking your medicine.

Breeding the Nutrition Out of Our Food

-

We like the idea that food can be the answer to our ills, that if we eat nutritious foods we won’t need medicine or supplements. We have valued this notion for a long, long time. The Greek physician Hippocrates proclaimed nearly 2,500 years ago: “Let food be thy medicine and medicine be thy food.” Today, medical experts concur. If we heap our plates with fresh fruits and vegetables, they tell us, we will come closer to optimum health.

This health directive needs to be revised. If we want to get maximum health benefits from fruits and vegetables, we must choose the right varieties. Studies published within the past 15 years show that much of our produce is relatively low in phytonutrients, which are the compounds with the potential to reduce the risk of four of our modern scourges: cancer, cardiovascular disease, diabetes and dementia. The loss of these beneficial nutrients did not begin 50 or 100 years ago, as many assume. Unwittingly, we have been stripping phytonutrients from our diet since we stopped foraging for wild plants some 10,000 years ago and became farmers.

These insights have been made possible by new technology that has allowed researchers to compare the phytonutrient content of wild plants with the produce in our supermarkets. The results are startling. (via Breeding the Nutrition Out of Our Food - NYTimes.com)

“No doubt alcohol, tobacco, and so forth, are things that a saint must avoid, but sainthood is also a thing that human beings must avoid.”

(via fabulouslyfreespirited)

“There is an alternative. We reenter time when we accept uncertainty; when we embrace the possibility of surprise; when we question the bindings of tradition and look for novel solutions to novel problems. The prototype for thinking “in time,” Smolin argues, is Darwinian evolution. Natural processes lead to genuinely new organisms, new structures, new complexity, and—here he departs from the thinking of most scientists—new laws of nature. All is subject to change. “Laws are not timeless,” he says. “Like everything else, they are features of the present, and they can evolve over time.” The faith in timeless, universal laws of nature is part of the great appeal of the scientific enterprise. It is a vision of transcendence akin to the belief in eternity that draws people to religion. This view of science claims that the explanations for our world lie in a different place altogether, the world of shadows, or heaven: “another, more perfect world standing apart from everything that we perceive.” But for Smolin this is a dodge, no better than theology or mysticism. Instead, he wants us to consider the possibility that timeless laws of nature are no more real than perfect equilateral triangles. They exist, but only in our minds.”

“As a result of this paradox, we live in a state of alienation from what we most value…. In science, experiments and their analysis are time-bound, as are all our observations of nature, yet we imagine that we uncover evidence for timeless natural laws.”

“We say that time passes, time goes by, and time flows. Those are metaphors. We also think of time as a medium in which we exist. If time is like a river, are we standing on the bank watching, or are we bobbing along? It might be better merely to say that things happen, things change, and time is our name for the reference frame in which we organize our sense that one thing comes before another.”

“So spacetime was born. In spacetime all events are baked together, a four-dimensional continuum. Past and future are no more privileged than left and right or up and down. The time dimension only looks special for the reason Wells mentioned: our consciousness is involved. We have a limited perspective. At any instant we see only a slice of the loaf, a puny three-dimensional cross-section of the whole. For the modern physicist, reality is the whole thing, past and future joined in a single history. The sensation of now is just that, a sensation, and different for everyone. Instead of one master clock, we have clocks in multitudes. And other paraphernalia, too: light cones and world lines and time-like curves and other methods for charting the paths of light and objects through this four-dimensional space. To say that the spacetime view of reality has empowered the physicists of the past century would be an understatement.”

4 ways Google Glass makes us Transhuman (via the Lifeboat Foundation) is a post worth reading wherein augmented reality visors, Google Glass and augmented memory are examined. “Transhumanism is all about the creative and ethical use of technology to better the human condition. Futurists, when discussing topics related to transhumanism, tend to look at nano-tech, bio-mechanical augmentation and related technology that, for the most part, is beyond the comprehension of lay-people. If Transhumanism as a movement is to succeed, we have to explain its goals and benefits to humanity by addressing the common-man. After all, transhumanism is not the exclusive domain, nor restricted to the literati, academia or the rich. The more the common man realizes that (s)he is indeed already transhuman in a way — the lesser the taboo associated with the movement and the faster the law of accelerating returns will kick in, leading to eventual Tech Singularity.” - Read on

Principles of locomotion in confined spaces could help robot teams work underground (5/22/2013)

Learning from ants

-

Future teams of subterranean search and rescue robots may owe their success to the lowly fire ant, a much-despised insect whose painful bites and extensive networks of underground tunnels are all-too-familiar to people living in the southern United States.

By studying fire ants in the laboratory using video tracking equipment and X-ray computed tomography, researchers have uncovered fundamental principles of locomotion that robot teams could one day use to travel quickly and easily through underground tunnels. Among the principles is building tunnel environments that assist in moving around by limiting slips and falls, and by reducing the need for complex neural processing.

Among the study’s surprises was the first observation that ants in confined spaces use their antennae for locomotion as well as for sensing the environment.

“Our hypothesis is that the ants are creating their environment in just the right way to allow them to move up and down rapidly with a minimal amount of neural control,” said Dan Goldman, an associate professor in the School of Physics at the Georgia Institute of Technology, and one of the paper’s co-authors. “The environment allows the ants to make missteps and not suffer for them. These ants can teach us some remarkably effective tricks for maneuvering in subterranean environments.”

The research was scheduled to be reported May 20 in the early online edition of the journal Proceedings of the National Academy of Sciences. The work was sponsored by the National Science Foundation’s Physics of Living Systems program.

See on cyberneticsnews.com

*I’m catching the train to Milano this very afternoon.

Big Data Needs a Big Theory to Go with It

As the world becomes increasingly complex and interconnected, some of our biggest challenges have begun to seem intractable. What should we do about uncertainty in the financial markets? How can we predict energy supply and demand? How will climate change play out? How do we cope with rapid urbanization? Our traditional approaches to these problems are often qualitative and disjointed and lead to unintended consequences. To bring scientific rigor to the challenges of our time, we need to develop a deeper understanding of complexity itself.

See on scientificamerican.com

A conceptual and empirical framework for the social distribution of cognition: The case of memory

The power team of Barnier, Sutton, Harris, and Wilson. Paradigms in which human cognition is conceptualised as “embedded”, “distributed”, or “extended” have arisen in different areas of the cognitive sciences in the past 20 years.

See on manwithoutqualities.com

What If Everything Ran Like the Internet?

When the Internet was first starting to catch on in the 1980s, I was invited, as a representative of a large business consulting organization, to a day-long seminar explaining what this new phenomenon was and how businesses should be responding to it. It was led by a man who now makes millions as a social media guru (I won’t embarrass him by identifying him), but at the time he warned that the Internet had no future. The reason, he said, was that it was “anarchic” — there was no management, no control, no way of fixing things quickly if they got “out of hand”. The solution, he said, was for business and government leaders to get together and create an orderly alternative — “Internet 2” he called it — that would replace the existing Internet when it inevitably imploded. Of course, he couldn’t have been more wrong.

See on howtosavetheworld.ca

Making good

-

Repairing things is about more than thrift. It is about creating something bold and original

-

The 16th-century Japanese tea master Sen no Rikyū is said to have ignored his host’s fine Song Dynasty Chinese tea jar until the owner smashed it in despair at his indifference. After the shards had been painstakingly reassembled by the man’s friends, Rikyū declared: ‘Now, the piece is magnificent.’ So it went in old Japan: when a treasured bowl fell to the floor, one didn’t just sigh and reach for the glue. The old item was gone, but its fracture created the opportunity to make a new one.

Smashed ceramics would be stuck back together with a strong adhesive made from lacquer and rice glue, the web of cracks emphasised with coloured lacquer. Sometimes, the lacquer was mixed or sprinkled with powdered silver or gold and polished with silk so that the joins gleamed; a bowl or container repaired in this way would typically be valued more highly than the original. According to Christy Bartlett, a contemporary tea master based in San Francisco, it is this ‘gap between the vanity of pristine appearance and the fractured manifestation of mortal fate which deepens its appeal’. The mended object is special precisely because it was worth mending. The repair, like that of an old teddy bear, is a testament to the affection in which the object is held.

Go read..

Onstage at the D11 conference in California, Tim Cook responded to a question about Google Glass, noting that wearables are a profound type of technology — and that Apple may be working on its own wearable technology. “It’s an area that’s ripe for exploration,” Cook said, “it’s ripe for us to get excited about. Lots of companies will play in this space.”

(via techspotlight)

(via awarethroughthesenses)

Impossible Limbo - manifest 5, by J.D Doria, 2013

“The internet is ubiquitous, yet its detailed inner workings remain wrapped in mystery. We rely on a wide range of myths, metaphors and mental-models to describe and communicate the network’s abstract concepts and processes. Packets, viruses, worms, trojan horses, crawlers and cookies are all part of this imaginary bestiary of software. This new mythology is one of technological wonders, such as live streams and cloud storage, but also of traps, monsters and malware agents. Folk tales of technology, however abstract and metaphorical, serve as our references and guidelines when it comes to making decisions and protecting ourselves from attacks or dangers. Between educational props and memorabilia, this series of objects visualises and celebrates the abstract bestiary of the internet and acts as a tangible starting point to discuss our relationship to IT technology.”

“It was just amazing to me that you could have a little more or less of some chemical and your whole worldview would be different,” he recalls, smiling with boyish wonder. “If you can switch a chemical and your personality changes, who are you?”

The Inevitable Climate Catastrophe - The Chronicle Review - The Chronicle of Higher Education

Climate change has almost extinguished life on earth on three occasions. Some 250 million years ago, a series of volcanic eruptions in Siberia altered the earth’s atmosphere, wiping out 90 percent of plant and animal species. Next, 65 million years ago, an asteroid struck what is now Mexico and created an atmospheric catastrophe that eliminated 50 percent of the earth’s species (including the dinosaurs). Finally, some 73,000 years ago, the eruption of Mount Toba, in Indonesia, caused a “winter” that lasted several years, apparently killing off most of the human population.

No subsequent environmental disaster has had an impact on that scale, but climate change has caused widespread destruction and dislocation. About 13,000 years ago, the Northern Hemisphere experienced an episode of cooling (probably after a comet collided with the earth) that wiped out most species there. In the 14th century, a combination of climatic oscillations and major epidemics caused severe depopulation and disruption in much of Europe and Asia. In the 17th century, the planet experienced some of the coldest weather recorded in the past millennium. To the English poet John Milton, who experienced these catastrophes, it seemed like “a universe of death.”

Could Time End?: George Musser at TEDxUniTn

-

Could time come to an end? What would that even mean? Last month I gave a talk about this strange physics idea at a TEDx event in Trento, Italy, based on a Scientific American article I wrote in 2010. My conceit was that time’s end poses a paradox that might be resolved if time is emergent. I don’t know whether that’s right, and it goes against what Lee Smolin argues in his latest book, but, if nothing else, this idea does provide a loose framework to talk about such physics concepts as the arrow of time and the holographic principle. I’ll also be speaking on this general topic at the Empiricist League, an informal monthly science gathering, in Brooklyn on June 11th.

-

George has been a contributing editor for Scientific American magazine in New York since 2012; before that he was a senior editor there from 1998. He is the author of The Complete Idiot’s Guide to String Theory. In 2011 he won the American Institute of Physics Science Writing Award and the National Magazine Award, category of General Excellence; in 2010 he won the American Astronomical Society Jonathan Eberhart Planetary Sciences Journalism Award; and in 2003 the National Magazine Award, category of single-topic issue. He did his undergraduate studies in electrical engineering and mathematics at Brown University and his graduate studies in planetary science at Cornell University, where he was a National Science Foundation Graduate Fellow.

(by TEDxTalks)

Novelty and the Brain: Why New Things Make Us Feel So Good

We all like shiny new things, whether it’s a new gadget, new city, or new job. In fact, our brains are made to be attracted to novelty—and it turns out that it could actually improve our memory and learning capacity. The team at social sharing app Buffer explains how. Full Story: LifeHacker

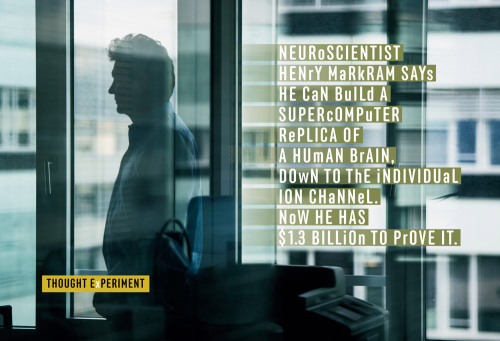

Even by the standards of the TED conference, Henry Markram’s 2009 TEDGlobal talk was a mind-bender. He took the stage of the Oxford Playhouse, clad in the requisite dress shirt and blue jeans, and announced a plan that—if it panned out—would deliver a fully sentient hologram within a decade. He dedicated himself to wiping out all mental disorders and creating a self-aware artificial intelligence. And the South African–born neuroscientist pronounced that he would accomplish all this through an insanely ambitious attempt to build a complete model of a human brain—from synapses to hemispheres—and simulate it on a supercomputer. Markram was proposing a project that has bedeviled AI researchers for decades, that most had presumed was impossible. He wanted to build a working mind from the ground up.

In the four years since Markram’s speech, he hasn’t backed off a nanometer. The self-assured scientist claims that the only thing preventing scientists from understanding the human brain in its entirety—from the molecular level all the way to the mystery of consciousness—is a lack of ambition. If only neuroscience would follow his lead, he insists, his Human Brain Project could simulate the functions of all 86 billion neurons in the human brain, and the 100 trillion connections that link them. And once that’s done, once you’ve built a plug-and-play brain, anything is possible. You could take it apart to figure out the causes of brain diseases. You could rig it to robotics and develop a whole new range of intelligent technologies. You could strap on a pair of virtual reality glasses and experience a brain other than your own. (via The $1.3B Quest to Build a Supercomputer Replica of a Human Brain | Wired Science | Wired.com)

Electroceuticals: swapping drugs for devices

Bioelectronics is the field of developing medicines that use electrical impulses to modulate the body’s neural circuits as an alternative to drug-based interventions. How far away are we from having these very targeted “electroceuticals”?

See on wired.co.uk

Should salt be kept under lock and key?

Excess sodium prematurely kills as many as 150,000 people in the US each year

Salt levels in processed and fast foods remain dangerously high, despite calls for the food industry to voluntarily reduce sodium levels, new research shows.

For a study, researchers assessed the sodium content in selected processed foods and in fast-food restaurants in 2005, 2008, and 2011 and found sodium content is as high as ever.

“The voluntary approach has failed,” says Stephen Havas, professor of preventive medicine at Northwestern University Feinberg School of Medicine.

“The study demonstrates that the food industry has been dragging its feet and making very few changes. This issue will not go away unless the government steps in to protect the public. The amount of sodium in our food supply needs to be regulated.”

Excess sodium prematurely kills as many as 150,000 people in the US each year. About 90 percent of the US population develops high blood pressure and high salt in the diet is a major cause. High blood pressure increases the risk of developing heart attacks and strokes, often resulting in death or disability.

“High salt content in food benefits the food industry,” Havas says. “High salt masks the flavor of ingredients that are often not the best quality and also stimulates people to drink more soda and alcohol, which the industry profits from.”

A typical American consumes an average of almost two teaspoons a day of salt, vastly higher than the recommended amount of three-fifths of a teaspoon or no more than 1,500 milligrams, as recommended by the American Heart Association. About 80 percent of our daily sodium consumption comes from eating processed or restaurant foods. Very little comes from salt we add to food.

“The only way for most people to meet the current sodium recommendation is to cook from scratch and not use salt,” Havas says. “But that’s not realistic for most people.”

See on futurity.org

“Philosophy Isn’t Dead Yet:

…there could not be a worse time for philosophers to surrender the baton of metaphysical inquiry to physicists. Fundamental physics is in a metaphysical mess and needs help.

The attempt to reconcile its two big theories, general relativity and quantum mechanics, has stalled for nearly 40 years. Endeavours to unite them, such as string theory, are mathematically ingenious but incomprehensible even to many who work with them. This is well known. A better-kept secret is that at the heart of quantum mechanics is a disturbing paradox – the so-called measurement problem, arising ultimately out of the Uncertainty Principle – which apparently demonstrates that the very measurements that have established and confirmed quantum theory should be impossible.

Beyond these domestic problems there is the failure of physics to accommodate conscious beings. The attempt to fit consciousness into the material world, usually by identifying it with activity in the brain, has failed dismally, if only because there is no way of accounting for the fact that certain nerve impulses are supposed to be conscious (of themselves or of the world) while the overwhelming majority (physically essentially the same) are not. In short, physics does not allow for the strange fact that matter reveals itself to material objects (such as physicists).”

“The world might be, as Ludwig Wittgenstein said, everything that is the case — but the case is bigger than it was: the number of things available for our regard has increased beyond belief”

“Facebook is the living dead: the most popular, least relevant social network where teenagers and adults alike gather out of fear of missing out on things that don’t even make them happy”

Anyone Can Be a Mechanic With This Brilliant Augmented Reality App

Have you ever had a problem with your car, but were left completely and utterly bewildered when you popped the hood in hopes of fixing it yourself?

“Security and privacy are not differentiators in most users’ minds because they are focused elsewhere. Users have strong opinions about wanting to be safe, but pointedly addressing the issue with them brings up strong negative emotions. As Ben Adida, Director of Identity at Mozilla, puts it, ‘Security is extremely important, but it is not the selling point.’”

FYI: Can A Bionic Eye See As Well As A Human Eye?

It’s the difference between a grainy black-and-white film and HD.

Previously blind patients who receive the recently FDA-approved Argus II bionic eye system will regain some degree of functional sight. The retinal implant technology, developed and distributed by Second Sight, can improve quality of life for patients who have lost functional vision due to retinitis pigmentosa, a disease that causes retinal cells to die. But the implant doesn’t facilitate a sudden recovery of 20/20 vision.

(via howstuffworks)

“

“There is no citizen of the world who can be complacent about this,” said Janos Bogardy, director of the UN University’s Institute for Environment and Human Security.

On Wednesday, UN secretary general, Ban Ki-moon, added his voice to concerns about water security: “We live in an increasingly water insecure world where demand often outstrips supply and where water quality often fails to meet minimum standards. Under current trends, future demands for water will not be met,” he said.

The scientists, meeting in Bonn this week, called on politicians to include tough new targets on improving water in the sustainable development goals that will be introduced when the current millennium development goals expire in 2015. They want governments to introduce water management systems that will address the problems of pollution, over-use, wastage and climate change.

”

Global majority faces water shortages ‘within two generations’

Experts call on governments to start conserving water in face of climate change, pollution and over-use

-

The majority of the 9 billion people on Earth will live with severe pressure on fresh water within the space of two generations as climate change, pollution and over-use of resources take their toll, 500 scientists have warned.

The world’s water systems would soon reach a tipping point that “could trigger irreversible change with potentially catastrophic consequences”, more than 500 water experts warned on Friday as they called on governments to start conserving the vital resource. They said it was wrong to see fresh water as an endlessly renewable resource because, in many cases, people are pumping out water from underground sources at such a rate that it will not be restored within several lifetimes.

“These are self-inflicted wounds,” said Charles Vörösmarty, a professor at the Cooperative Remote Sensing Science and Technology Centre. “We have discovered tipping points in the system. Already, there are 1 billion people relying on ground water supplies that are simply not there as renewable water supplies.”

A majority of the population – about 4.5 billion people globally – already live within 50km of an “impaired” water resource – one that is running dry, or polluted. If these trends continue, millions more will see the water on which they depend running out or so filthy that it no longer supports life.

See on guardian.co.uk

Mapping the Great Indoors

-

On a sunny Wednesday, with a faint haze hanging over the Rockies, Noah Fierer eyed the field site from the back of his colleague’s Ford Explorer. Two blocks east of a strip mall in Longmont, one of the world’s last underexplored ecosystems had come into view: a sandstone-colored ranch house, code-named Q. A pair of dogs barked in the backyard.

Dr. Fierer, 39, a microbiologist at the University of Colorado Boulder and self-described “natural historian of cooties,” walked across the front lawn and into the house, joining a team of researchers inside. One swabbed surfaces with sterile cotton swabs. Others logged the findings from two humming air samplers: clothing fibers, dog hair, skin flakes, particulate matter and microbial life.

Ecologists like Dr. Fierer have begun peering into an intimate, overlooked world that barely existed 100,000 years ago: the great indoors. They want to know what lives in our homes with us and how we “colonize” spaces with other species — viruses, bacteria, microbes. Homes, they’ve found, contain identifiable ecological signatures of their human inhabitants. Even dogs exert a significant influence on the tiny life-forms living on our pillows and television screens. Once ecologists have more thoroughly identified indoor species, they hope to come up with strategies to scientifically manage homes, by eliminating harmful taxa and fostering species beneficial to our health. (via Getting to Know Our Microbial Roommates - NYTimes.com)

Mr. Know-It-All on Whether You Own Your Kindle Books and How to Nab Free Journal Articles | Wired.com

Mr. Know-It-All answers your questions about Kindle books, the carbon footprint of online shopping and the ethics of reading journal articles for free.

-

Is it true that I don’t really own the books I purchase for my Kindle?

If convenient euphemisms could somehow be outlawed, the “Buy now with 1-Click” button on Kindle pages would have to be relabeled “License now with 1-Click.” Amazon’s terms of service clearly state that, unlike those bulky slabs of arboreal matter that imparted knowledge to generations past, Kindle books can never be owned in the traditional sense. Instead, your $12.99 merely earns you the right to view the work on your Kindle. This arrangement gives Amazon the authority to snatch back that content if the company thinks you’ve been naughty—say, by copying and distributing ebooks or by engaging in fraud with your account.

Look at it from Amazon’s perspective: Part of the rationale for letting you resell old-school books is that you can do so only once—after the transaction is complete, the physical book is, by definition, no longer in your possession. That’s not necessarily the case with ebooks, which can be duplicated with ease. If Amazon grants its customers true ownership of Kindle books, it will have no quick recourse against scoundrels who resell books multiple times without deleting the original. Wiping someone’s Kindle stash is a lot easier than filing a lawsuit.

But there’s still something outrageous and infuriating about the situation. If Jeff Bezos showed up at your door and said he wanted to repossess your books, would you let him in? No, you’d unleash your hounds. And then hope that one of the dogs got ahold of his wallet.

See on wired.com

“

The core problem starts with philosophy. The owners of the biggest computers like to think about them as big artificial brains. But actually they are simply repackaging valuable information gathered from everyone else.

This is what “big data” means.

”

Sell your data to save the economy and your future

-

Imagine our world later in this century, when machines have got better.

Cars and trucks drive themselves, and there’s hardly ever an accident. Robots root through the earth for raw materials, and miners are never trapped. Robotic surgeons rarely make errors.

Clothes are always brand new designs that day, and always fit perfectly, because your home fabricator makes them out of recycled clothes from the previous day. There is no laundry.

I can’t tell you which of these technologies will start to work in this century for sure, and which will be derailed by glitches, but at least some of these things will come about. (via BBC News - Sell your data to save the economy and your future)

Imagine a Maasai warrior, or a Maasai woman adorned with beads - it’s one of the most powerful images of tribal Africa. Dozens of companies use it to sell products - but Maasai elders are now considering seeking protection for their “brand”.

Dressed in smart white checked shirt and grey sweater, you’d hardly know Isaac ole Tialolo is Maasai.

The large round holes in his ears - where his jewellery sometimes sits - might be a clue, though.

Isaac is a Maasai leader and elder. Back home in the mountains near Naivasha, in southern Kenya, he lives a semi-nomadic life, herding sheep, goats, and - most importantly - cattle.

But Isaac is also chair of a new organisation, the Maasai Intellectual Property Initiative, and it’s a project that’s beginning to take him around the world - including, most recently, London.

“We all know that we have been exploited by people who just come around, take our pictures and benefit from it,” he says.

“We have been exploited by so many things you cannot imagine.”

Crunch time for Isaac came about 20 years ago, when a tourist took a photo of him, without asking permission - something the Maasai are particularly sensitive about.

“We believed that if somebody takes your photograph, he has already taken your blood,” he explains.

Isaac was so furious that he smashed the tourist’s camera. Twenty years later, he is mild-mannered and impeccably turned out - but equally passionate about what he sees as the use, and abuse, of his culture.

“I think people need to understand the culture of the others and respect it,” he says.

“You should not use it to your own benefit, leaving the community - or the owner of the culture - without anything.” (via BBC News - Brand Maasai: Why nomads might trademark their name)

“If our hope is to describe the world fully, a place is necessary for preferable, ineffable, metaphorical, primary process, concrete-experience, intuitive and esthetic types of cognition, for there are certain aspects of reality which can be cognized in no other way.”

“

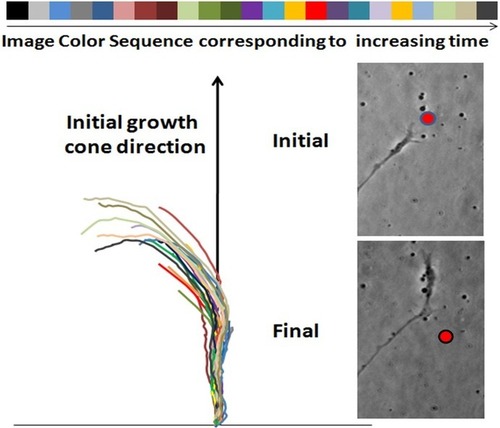

People with high IQ scores aren’t just more intelligent - they also process sensory information differently, according to a new study. Scientists discovered that the brains of people with high IQ are automatically more selective when it comes to perceiving moving objects, meaning that they are more likely to suppress larger and less relevant background motion. ‘It is not that people with high IQ are simply better at visual perception,’ said Duje Tadin of the University of Rochester. ‘Instead, their visual perception is more discriminating.’

‘They excel at seeing small, moving objects but struggle in perceiving large, background-like motions.’

The discovery was made by asking people to watch videos showing moving bars on a computer screen.

Their task was to state whether the bars were moving to the left or to the right.

In other words, it isn’t a conscious strategy but rather something automatic and fundamentally different about the way these people’s brains work.

The ability to block out distraction is very useful in a world filled with more information than we can possibly take in.

It helps to explain what makes some brains more efficient than others. An efficient brain ‘has to be picky’, Tadin said.

”

(via myserendipities)

Home Tweet Home: A House with Its Own Voice on Twitter

As weird as a Twitter-enabled house might seem, it offers a glimpse of the future. As interest grows in the “Internet of things”—the idea of adding network connectivity to all sorts of normal objects—everything from desk lamps to ovens may soon come Internet-ready.

Read more: http://www.technologyreview.com/news/514941/home-tweet-home-a-house-with-its-own-voice-on-twitter/

Read of the day: I type, therefore I am

-

More human beings can write and type their every thought than ever before in history. Something to celebrate or deplore?

-

At some point in the past two million years, give or take half a million, the genus of great apes that would become modern humans crossed a unique threshold. Across unknowable reaches of time, they developed a communication system able to describe not only the world, but the inner lives of its speakers. They ascended — or fell, depending on your preferred metaphor — into language.

The vast bulk of that story is silence. Indeed, darkness and silence are the defining norms of human history. The earliest known writing probably emerged in southern Mesopotamia around 5,000 years ago but, for most of recorded history, reading and writing remained among the most elite human activities: the province of monarchs, priests and nobles who reserved for themselves the privilege of lasting words. (via Tom Chatfield – Language and digital identity)

go read this..

“I used to believe in the essential unreality of time. Indeed, I went into physics because as an adolescent I yearned to exchange the time-bound, human world, which I saw as ugly and inhospitable, for a world of pure, timeless truth…. I no longer believe that time is unreal. In fact I have swung to the opposite view: Not only is time real, but nothing we know or experience gets closer to the heart of nature than the reality of time.”

Lee Smolin

Time Regained! — www.nybooks.com — Readability

“

We are sure only in our verbal universe.

The philosophers’ originality comes down to inventing terms. Since there are only three or four attitudes by which to confront the world—and about as many ways of dying—the nuances which multiply and diversify them derive from no more than the choice of words, bereft of any metaphysical range.

We are engulfed in a pleonastic universe, in which the questions and answers amount to the same thing.

”

Stroke stem-cell trial shows promise

Five severely disabled stroke patients show signs of recovery following the injection of stem cells into their brain. Prof Keith Muir, of Glasgow University, who is treating them, says he is “surprised” by the mild to moderate improvements in the five patients. He stresses it is too soon to tell whether the effect is due to the treatment they are receiving.

The results will be presented at the European Stroke Conference in London.

Commenting on the research, Dr Clare Walton of the Stroke Association said: “The use of stem cells is a promising technique which could help to reverse some of the disabling effects of stroke. We are very excited about this trial; however, we are currently at the beginning of a very long road and significant further development is needed before stem cell therapy can be regarded as a possible treatment.”

The stem cells were created 10 years ago from one sample of nerve tissue taken from a foetus. The company that produces the stem cells, Reneuron, is able to manufacture as many stem cells as it needs from that original sample.

It is because a foetal tissue sample was involved in the development of the treatment that it has its critics.

Among them is anti-abortion campaigner Lord Alton. “The bottom line is surely that the true donor (the foetus) could not possibly have given consent and that, of course, raises significant ethical considerations,” he said.

Reneuron says the trial - which it funded - has ethical approval from the medicines regulator. It added that one tissue sample was used in development 10 years ago and that foetal material has not been used since.

See on bbc.co.uk

“In relativity, movement is continuous, causally determinate and well defined, while in quantum mechanics it is discontinuous, not causally determinate and not well defined.”

(via scienceisbeauty)

“I never saw a wild thing sorry for itself. A small bird will drop frozen dead from a bough without ever having felt sorry for itself.”

(via largerloves)

“Philosophy, “a branch of fantastic literature”, a genre that, like poetry, aims to expand the imagination rather than to demonstrate or persuade.”

Growing new brains with infrared light [exclusive] | KurzweilAI

Illustration of the neuronal beacon for guiding axon growth direction (credit: B. Black et al./Optics Letters) University of Texas Arlington scientists

See on kurzweilai.net

Do salamanders hold the solution to regeneration? | KurzweilAI

Salamander (credit: Scott Camazine/Wikimedia Commons) Salamanders’ immune systems are key to their remarkable ability to regrow limbs, and could also

See on kurzweilai.net

As the human body fine-tunes its neurological wiring, nerve cells often must fix a faulty connection by amputating an axon — the “business end” of the neuron that sends electrical impulses to tissues or other neurons. It is a dance with death, however, because the molecular poison the neuron deploys to sever an axon could, if uncontained, kill the entire cell.

Researchers from the University of North Carolina School of Medicine have uncovered some surprising insights about the process of axon amputation, or “pruning,” in a study published May 21 in the journal Nature Communications. Axon pruning has mystified scientists curious to know how a neuron can unleash a self-destruct mechanism within its axon, but keep it from spreading to the rest of the cell. The researchers’ findings could offer clues about the processes underlying some neurological disorders.

“Aberrant axon pruning is thought to underlie some of the causes for neurodevelopmental disorders, such as schizophrenia and autism,” said Mohanish Deshmukh, PhD, professor of cell biology and physiology at UNC and the study’s senior author. “This study sheds light on some of the mechanisms by which neurons are able to regulate axon pruning.”

Axon pruning is part of normal development and plays a key role in learning and memory. Another important process, apoptosis — the purposeful death of an entire cell — is also crucial because it allows the body to cull broken or incorrectly placed neurons. But both processes have been linked with disease when improperly regulated.

The research team placed mouse neurons in special devices called microfluidic chambers that allowed the researchers to independently manipulate the environments surrounding the axon and cell body to induce axon pruning or apoptosis.

They found that although the nerve cell uses the same poison — a group of molecules known as Caspases — whether it intends to kill the whole cell or just the axon, it deploys the Caspases in a different way depending on the context.

“People had assumed that the mechanism was the same regardless of whether the context was axon pruning or apoptosis, but we found that it’s actually quite distinct,” said Deshmukh. “The neuron essentially uses the same components for both cases, but tweaks them in a very elegant way so the neuron knows whether it needs to undergo apoptosis or axon pruning.”

In apoptosis, the neuron deploys the deadly Caspases using an activator known as Apaf-1. In the case of axon pruning, Apaf-1 was simply not involved, despite the presence of Caspases. “This is really going to take the field by surprise,” said Deshmukh. “There’s very little precedent of Caspases being activated without Apaf-1. We just didn’t know they could be activated through a different mechanism.”

In addition, the team discovered that neurons employ other molecules as safety brakes to keep the “kill” signal contained to the axon alone. “Having this brake keeps that signal from spreading to the rest of the body,” said Deshmukh. “Remarkably, just removing one brake makes the neurons more vulnerable.”

Deshmukh said the findings offer a glimpse into how nerve cells reconfigure themselves during development and beyond. Enhancing our understanding of these basic processes could help illuminate what has gone wrong in the case of some neurological disorders.